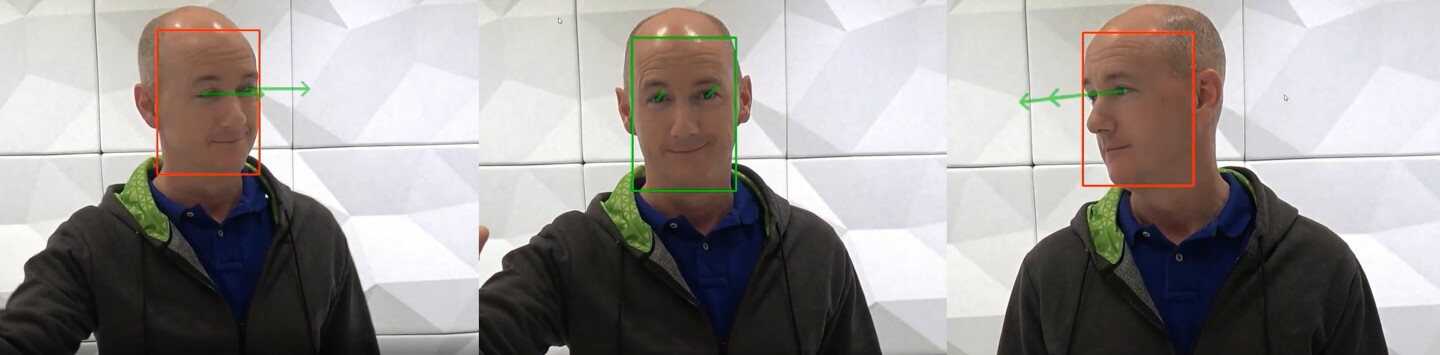

Gaze Estimation

The GazeNet model detects a person’s point of regard (X, Y, Z) and gaze vector (theta and phi). The gaze vector can also be derived from the eye position and the point of regard.
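To illustrate how a gaze vector can be derived from an eye position and a point of regard, here is a minimal sketch. The function name `gaze_angles` and the angle convention are assumptions made for illustration (theta as elevation above the X-Z plane, phi as azimuth around the Y axis measured from +Z); the convention GazeNet actually uses may differ, so check the model card before relying on it.

```python
import math

def gaze_angles(eye_pos, point_of_regard):
    """Return (unit_vector, theta, phi) for the ray from the eye to the gaze point.

    Assumed convention (hypothetical, not taken from the GazeNet docs):
      theta = elevation above the X-Z plane, in radians
      phi   = azimuth around the Y axis, measured from +Z, in radians
    Both points must be expressed in the same 3D coordinate frame.
    """
    dx, dy, dz = (p - e for p, e in zip(point_of_regard, eye_pos))
    norm = math.sqrt(dx * dx + dy * dy + dz * dz)
    if norm == 0:
        raise ValueError("eye position and point of regard coincide")
    ux, uy, uz = dx / norm, dy / norm, dz / norm
    theta = math.asin(uy)       # elevation of the unit gaze vector
    phi = math.atan2(ux, uz)    # azimuth of the unit gaze vector
    return (ux, uy, uz), theta, phi
```

For example, `gaze_angles((0, 0, 0), (1, 0, 1))` describes a gaze with zero elevation that is rotated 45 degrees from the +Z axis toward +X.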

GazeNet is a multi-input, multi-branch network. It takes four inputs: a face crop, a left eye crop, a right eye crop, and a facegrid. The face, left eye, and right eye branches use AlexNet as the feature extractor. The facegrid branch is built from fully connected layers.
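As a rough illustration of the facegrid input, the sketch below builds a binary mask that marks where the face bounding box falls within the frame. The helper name `make_facegrid`, the `(x, y, width, height)` box format, and the 25 x 25 grid size are assumptions for this example, not values confirmed by this page.

```python
import math

def make_facegrid(frame_w, frame_h, face_box, grid_size=25):
    """Return a flattened grid_size*grid_size binary mask of the face location.

    face_box is (x, y, width, height) in pixels (assumed format).
    grid_size=25 is an assumption; use the value the model expects.
    """
    x, y, w, h = face_box
    # Map the pixel box onto grid cells, clamping to the grid bounds.
    col0 = max(0, int(x * grid_size / frame_w))
    row0 = max(0, int(y * grid_size / frame_h))
    col1 = min(grid_size, math.ceil((x + w) * grid_size / frame_w))
    row1 = min(grid_size, math.ceil((y + h) * grid_size / frame_h))

    grid = [[0] * grid_size for _ in range(grid_size)]
    for r in range(row0, row1):
        for c in range(col0, col1):
            grid[r][c] = 1  # cell is at least partly covered by the face
    # Flatten row by row so it can feed a fully connected layer.
    return [v for row in grid for v in row]
```

A flattened mask suits a branch made of fully connected layers, since such layers take a 1D vector rather than an image.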

GazeNet use case

The training algorithm optimizes the network to minimize the root mean square error between the predicted and ground truth points of regard. This model was trained using the Gaze Estimation training app in TAO Toolkit v3.0.
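The error metric can be sketched as follows. This is one common reading of root mean square error for 3D points (the square root of the mean squared Euclidean distance per sample); the exact formulation used in TAO Toolkit training is not stated here, and the function name `point_of_regard_rmse` is hypothetical.

```python
import math

def point_of_regard_rmse(predicted, ground_truth):
    """RMSE between two equal-length lists of (X, Y, Z) points.

    Assumed definition: sqrt(mean over samples of squared Euclidean distance).
    """
    if len(predicted) != len(ground_truth) or not predicted:
        raise ValueError("inputs must be non-empty and the same length")
    total = 0.0
    for pred, truth in zip(predicted, ground_truth):
        # Squared Euclidean distance between one predicted and one true point.
        total += sum((p - t) ** 2 for p, t in zip(pred, truth))
    return math.sqrt(total / len(predicted))
```

The result is in the same units as the point coordinates, which makes it easy to read as a typical distance between the predicted and true gaze points.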

The primary use case for this model is to detect the eye point of regard and gaze vector. With appropriate video or image decoding and pre-processing, it can be applied to video streams or still images.

The datasheet for the model is available in its model card, hosted on NGC.