Visualization

Holoviz composites real-time streams of frames with multiple other layers, such as segmentation mask layers, geometry layers, and GUI layers.

For maximum performance, Holoviz uses Vulkan, which is already installed as part of the NVIDIA GPU driver.

Holoscan provides the Holoviz operator, which is sufficient for many visualization tasks, even complex ones. The Holoviz operator is used by multiple Holoscan example applications.

Additionally, for more advanced use cases, the Holoviz module can be used to create application-specific visualization operators. The Holoviz module provides a C++ API and is also used by the Holoviz operator.

The term Holoviz is used below for both the Holoviz operator and the Holoviz module. Both support roughly the same feature set. Where applicable, information on how to use a feature is provided for both the operator and the module. Features that are not supported by the operator are explicitly marked as such.

The core entities of Holoviz are layers. A layer is a two-dimensional image object. Multiple layers are composited to create the final output.

These layer types are supported by Holoviz:

image layer

geometry layer

GUI layer

All layers have common attributes which define the look and also the way layers are finally composited.

The priority determines the rendering order of the layers. Before rendering, the layers are sorted by priority. Layers with the lowest priority are rendered first, so the layer with the highest priority is rendered on top of all other layers. If layers have the same priority, their render order is undefined.
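The ordering rule can be illustrated with a small standalone sketch. The `Layer` struct and the `render_order` function are hypothetical and not part of the Holoviz API. The sketch uses `std::stable_sort`, so layers with equal priority keep their submission order here, whereas Holoviz leaves that order undefined.

```cpp
#include <algorithm>
#include <cassert>
#include <string>
#include <vector>

// Hypothetical stand-in for a layer; not a Holoviz type.
struct Layer {
  std::string name;
  int priority;
};

// Returns the layer names in the order they would be drawn:
// lowest priority first, highest priority last (and therefore on top).
std::vector<std::string> render_order(std::vector<Layer> layers) {
  std::stable_sort(layers.begin(), layers.end(),
                   [](const Layer& a, const Layer& b) { return a.priority < b.priority; });
  std::vector<std::string> names;
  names.reserve(layers.size());
  for (const auto& layer : layers) { names.push_back(layer.name); }
  return names;
}
```

Passing a geometry layer with priority 1 followed by an image layer with priority 0 yields the image layer first and the geometry layer last, regardless of the order in which they were specified.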

The example below draws a transparent geometry layer on top of an image layer (geometry data and image data creation is omitted in the code). Although the geometry layer is specified first, it is drawn last because it has a higher priority (1) than the image layer (0).

The operator has a receivers port which accepts tensors and video buffers produced by other operators. Each tensor or video buffer will result in a layer.

The operator autodetects the layer type for certain input types (e.g. a video buffer will result in an image layer).

For other input types or more complex use cases, input specifications can be provided either at initialization time as a parameter or dynamically at run time.

std::vector<ops::HolovizOp::InputSpec> input_specs;

auto& geometry_spec =

input_specs.emplace_back(ops::HolovizOp::InputSpec("point_tensor", ops::HolovizOp::InputType::POINTS));

geometry_spec.priority_ = 1;

geometry_spec.opacity_ = 0.5;

auto& image_spec =

input_specs.emplace_back(ops::HolovizOp::InputSpec("image_tensor", ops::HolovizOp::InputType::IMAGE));

image_spec.priority_ = 0;

auto visualizer = make_operator<ops::HolovizOp>("holoviz", Arg("tensors", input_specs));

// the source provides two tensors named "point_tensor" and "image_tensor" at the "outputs" port.

add_flow(source, visualizer, {{"outputs", "receivers"}});

The definition of a layer is started by calling one of the layer begin functions viz::BeginImageLayer(), viz::BeginGeometryLayer() or viz::BeginImGuiLayer(). The layer definition ends with viz::EndLayer().

Starting a layer definition resets the layer attributes, such as priority and opacity, to their defaults. So for the image layer there is no need to set the opacity to 1.0, since that is already the default.

namespace viz = holoscan::viz;

viz::Begin();

viz::BeginGeometryLayer();

viz::LayerPriority(1);

viz::LayerOpacity(0.5);

/// details omitted

viz::EndLayer();

viz::BeginImageLayer();

viz::LayerPriority(0);

/// details omitted

viz::EndLayer();

viz::End();

Image Layers

Image data can be either on the host or on the device (GPU). Both tensors and video buffers are accepted.

std::vector<ops::HolovizOp::InputSpec> input_specs;

auto& image_spec =

input_specs.emplace_back(ops::HolovizOp::InputSpec("image", ops::HolovizOp::InputType::IMAGE));

auto visualizer = make_operator<ops::HolovizOp>("holoviz", Arg("tensors", input_specs));

// the source provides an image named "image" at the "output" port.

add_flow(source, visualizer, {{"output", "receivers"}});

The function viz::BeginImageLayer() starts an image layer. An image layer displays a rectangular 2D image.

The image data is defined by calling viz::ImageCudaDevice(), viz::ImageCudaArray() or viz::ImageHost(). Various input formats are supported, see viz::ImageFormat.

For single-channel image formats, image colors can be looked up by defining a lookup table with viz::LUT().

viz::BeginImageLayer();

viz::ImageHost(width, height, format, data);

viz::EndLayer();
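To illustrate what a color lookup table does for single-channel images, here is a small standalone sketch. It does not call viz::LUT(); the `Rgba` type and the `apply_lut` function are hypothetical. Out-of-range indices are clamped to the last table entry, which is an assumption of this sketch and not documented Holoviz behavior.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical RGBA color with 8 bits per channel.
struct Rgba {
  std::uint8_t r, g, b, a;
};

inline bool operator==(const Rgba& x, const Rgba& y) {
  return x.r == y.r && x.g == y.g && x.b == y.b && x.a == y.a;
}

// Maps each single-channel pixel value to a color by using the value as an
// index into the lookup table. Indices past the end of the table are clamped
// to the last entry (an assumption of this sketch).
std::vector<Rgba> apply_lut(const std::vector<std::uint8_t>& pixels,
                            const std::vector<Rgba>& lut) {
  assert(!lut.empty());
  std::vector<Rgba> colors;
  colors.reserve(pixels.size());
  for (std::uint8_t value : pixels) {
    const std::size_t index = std::min<std::size_t>(value, lut.size() - 1);
    colors.push_back(lut[index]);
  }
  return colors;
}
```

A typical use is a segmentation mask where each pixel holds a class index and the table assigns one color per class, with a transparent entry for the background.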

Supported Image Formats

Supported formats for nvidia::gxf::VideoBuffer.

| nvidia::gxf::VideoFormat | Supported | Description |

|---|---|---|

| GXF_VIDEO_FORMAT_CUSTOM | - | |

| GXF_VIDEO_FORMAT_YUV420 | - | BT.601 multi planar 4:2:0 YUV |

| GXF_VIDEO_FORMAT_YUV420_ER | - | BT.601 multi planar 4:2:0 YUV ER |

| GXF_VIDEO_FORMAT_YUV420_709 | - | BT.709 multi planar 4:2:0 YUV |

| GXF_VIDEO_FORMAT_YUV420_709_ER | - | BT.709 multi planar 4:2:0 YUV ER |

| GXF_VIDEO_FORMAT_NV12 | - | BT.601 multi planar 4:2:0 YUV with interleaved UV |

| GXF_VIDEO_FORMAT_NV12_ER | - | BT.601 multi planar 4:2:0 YUV ER with interleaved UV |

| GXF_VIDEO_FORMAT_NV12_709 | - | BT.709 multi planar 4:2:0 YUV with interleaved UV |

| GXF_VIDEO_FORMAT_NV12_709_ER | - | BT.709 multi planar 4:2:0 YUV ER with interleaved UV |

| GXF_VIDEO_FORMAT_RGBA | ✓ | RGBA-8-8-8-8 single plane |

| GXF_VIDEO_FORMAT_BGRA | ✓ | BGRA-8-8-8-8 single plane |

| GXF_VIDEO_FORMAT_ARGB | ✓ | ARGB-8-8-8-8 single plane |

| GXF_VIDEO_FORMAT_ABGR | ✓ | ABGR-8-8-8-8 single plane |

| GXF_VIDEO_FORMAT_RGBX | ✓ | RGBX-8-8-8-8 single plane |

| GXF_VIDEO_FORMAT_BGRX | ✓ | BGRX-8-8-8-8 single plane |

| GXF_VIDEO_FORMAT_XRGB | ✓ | XRGB-8-8-8-8 single plane |

| GXF_VIDEO_FORMAT_XBGR | ✓ | XBGR-8-8-8-8 single plane |

| GXF_VIDEO_FORMAT_RGB | ✓ | RGB-8-8-8 single plane |

| GXF_VIDEO_FORMAT_BGR | ✓ | BGR-8-8-8 single plane |

| GXF_VIDEO_FORMAT_R8_G8_B8 | - | RGB - unsigned 8 bit multiplanar |

| GXF_VIDEO_FORMAT_B8_G8_R8 | - | BGR - unsigned 8 bit multiplanar |

| GXF_VIDEO_FORMAT_GRAY | ✓ | 8 bit GRAY scale single plane |

| GXF_VIDEO_FORMAT_GRAY16 | ✓ | 16 bit GRAY scale single plane |

| GXF_VIDEO_FORMAT_GRAY32 | - | 32 bit GRAY scale single plane |

| GXF_VIDEO_FORMAT_GRAY32F | ✓ | float 32 bit GRAY scale single plane |

| GXF_VIDEO_FORMAT_RGB16 | - | RGB-16-16-16 single plane |

| GXF_VIDEO_FORMAT_BGR16 | - | BGR-16-16-16 single plane |

| GXF_VIDEO_FORMAT_RGB32 | - | RGB-32-32-32 single plane |

| GXF_VIDEO_FORMAT_BGR32 | - | BGR-32-32-32 single plane |

| GXF_VIDEO_FORMAT_R16_G16_B16 | - | RGB - signed 16 bit multiplanar |

| GXF_VIDEO_FORMAT_B16_G16_R16 | - | BGR - signed 16 bit multiplanar |

| GXF_VIDEO_FORMAT_R32_G32_B32 | - | RGB - signed 32 bit multiplanar |

| GXF_VIDEO_FORMAT_B32_G32_R32 | - | BGR - signed 32 bit multiplanar |

| GXF_VIDEO_FORMAT_NV24 | - | multi planar 4:4:4 YUV with interleaved UV |

| GXF_VIDEO_FORMAT_NV24_ER | - | multi planar 4:4:4 YUV ER with interleaved UV |

| GXF_VIDEO_FORMAT_R8_G8_B8_D8 | - | RGBD unsigned 8 bit multiplanar |

| GXF_VIDEO_FORMAT_R16_G16_B16_D16 | - | RGBD unsigned 16 bit multiplanar |

| GXF_VIDEO_FORMAT_R32_G32_B32_D32 | - | RGBD unsigned 32 bit multiplanar |

| GXF_VIDEO_FORMAT_RGBD8 | - | RGBD 8 bit unsigned single plane |

| GXF_VIDEO_FORMAT_RGBD16 | - | RGBD 16 bit unsigned single plane |

| GXF_VIDEO_FORMAT_RGBD32 | - | RGBD 32 bit unsigned single plane |

| GXF_VIDEO_FORMAT_D32F | ✓ | Depth 32 bit float single plane |

| GXF_VIDEO_FORMAT_D64F | - | Depth 64 bit float single plane |

| GXF_VIDEO_FORMAT_RAW16_RGGB | - | RGGB-16-16-16-16 single plane |

| GXF_VIDEO_FORMAT_RAW16_BGGR | - | BGGR-16-16-16-16 single plane |

| GXF_VIDEO_FORMAT_RAW16_GRBG | - | GRBG-16-16-16-16 single plane |

| GXF_VIDEO_FORMAT_RAW16_GBRG | - | GBRG-16-16-16-16 single plane |

Image format detection for nvidia::gxf::Tensor. Tensors don’t have image format information attached. The Holoviz operator detects the image format from the tensor configuration.

| nvidia::gxf::PrimitiveType | Channels | Color format | Index for color lookup |

|---|---|---|---|

| kUnsigned8 | 1 | 8 bit GRAY scale single plane | ✓ |

| kInt8 | 1 | signed 8 bit GRAY scale single plane | ✓ |

| kUnsigned16 | 1 | 16 bit GRAY scale single plane | ✓ |

| kInt16 | 1 | signed 16 bit GRAY scale single plane | ✓ |

| kUnsigned32 | 1 | - | ✓ |

| kInt32 | 1 | - | ✓ |

| kFloat32 | 1 | float 32 bit GRAY scale single plane | ✓ |

| kUnsigned8 | 3 | RGB-8-8-8 single plane | - |

| kInt8 | 3 | signed RGB-8-8-8 single plane | - |

| kUnsigned8 | 4 | RGBA-8-8-8-8 single plane | - |

| kInt8 | 4 | signed RGBA-8-8-8-8 single plane | - |

| kUnsigned16 | 4 | RGBA-16-16-16-16 single plane | - |

| kInt16 | 4 | signed RGBA-16-16-16-16 single plane | - |

| kFloat32 | 4 | RGBA float 32 single plane | - |
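The detection rules in the table can be summarized in a few lines of code. The following is only a sketch that encodes the table rows: the `ElementType` enum mirrors the names of nvidia::gxf::PrimitiveType but is a hypothetical stand-in, and `detect_format` is not the operator's actual implementation.

```cpp
// Hypothetical mirror of the element types listed in the table;
// the real enum is nvidia::gxf::PrimitiveType.
enum class ElementType { kUnsigned8, kInt8, kUnsigned16, kInt16, kUnsigned32, kInt32, kFloat32 };

struct DetectedFormat {
  bool supported;        // false if the combination is not listed in the table
  bool usable_as_index;  // true if the values can index a color lookup table
};

// Encodes the table: single-channel tensors of any listed type can be used as
// lookup indices; 3-channel tensors must be 8 bit; 4-channel tensors must be
// 8 bit, 16 bit or 32-bit float.
constexpr DetectedFormat detect_format(ElementType type, int channels) {
  switch (channels) {
    case 1:
      return {true, true};
    case 3:
      return {type == ElementType::kUnsigned8 || type == ElementType::kInt8, false};
    case 4:
      return {type != ElementType::kUnsigned32 && type != ElementType::kInt32, false};
    default:
      return {false, false};
  }
}
```

For example, a 16-bit tensor with three channels is not listed in the table, so the sketch reports it as unsupported.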

See viz::ImageFormat for supported image formats. Additionally viz::ImageComponentMapping() can be used to map the color components of an image to the color components of the output.

Geometry Layers

A geometry layer is used to draw 2d or 3d geometric primitives. 2d primitives are points, lines, line strips, rectangles, ovals or text and are defined with 2d coordinates (x, y). 3d primitives are points, lines, line strips or triangles and are defined with 3d coordinates (x, y, z).

Coordinates start with (0, 0) in the top left and end with (1, 1) in the bottom right for 2d primitives.
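Data from other processing steps, such as bounding boxes from a detector, is often expressed in pixels and has to be converted to this normalized range first. The helper below is a hypothetical sketch of that conversion and is not part of Holoviz; it assumes the pixel rectangle refers to an image of `width` x `height` pixels that fills the whole layer.

```cpp
#include <array>

// Converts a rectangle given in pixels into normalized 2d coordinates, where
// (0, 0) is the top left corner and (1, 1) is the bottom right corner.
// Returns {x0, y0, x1, y1}.
constexpr std::array<float, 4> normalize_rect(int left, int top, int right, int bottom,
                                              int width, int height) {
  return std::array<float, 4>{{static_cast<float>(left) / static_cast<float>(width),
                               static_cast<float>(top) / static_cast<float>(height),
                               static_cast<float>(right) / static_cast<float>(width),
                               static_cast<float>(bottom) / static_cast<float>(height)}};
}
```

The four returned values have the same layout as the coordinate array used for a rectangle primitive: two corner points with two components each.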

See holoviz_geometry.cpp and holoviz_geometry.py for 2d geometric primitives and holoviz_geometry.py for 3d geometric primitives.

The function viz::BeginGeometryLayer() starts a geometry layer.

See viz::PrimitiveTopology for supported geometry primitive topologies.

There are functions to set attributes for geometric primitives like color (viz::Color()), line width (viz::LineWidth()) and point size (viz::PointSize()).

The code below draws a red rectangle and green text.

namespace viz = holoscan::viz;

viz::BeginGeometryLayer();

// draw a red rectangle

viz::Color(1.f, 0.f, 0.f, 1.f);

const float data[]{0.1f, 0.1f, 0.9f, 0.9f};

viz::Primitive(viz::PrimitiveTopology::RECTANGLE_LIST, 1, sizeof(data) / sizeof(data[0]), data);

// draw green text

viz::Color(0.f, 1.f, 0.f, 1.f);

viz::Text(0.5f, 0.5f, 0.2f, "Text");

viz::EndLayer();

ImGui Layers

ImGui layers are not supported when using the Holoviz operator.

The Holoviz module supports user interface layers created with Dear ImGui.

Calls to the Dear ImGui API between viz::BeginImGuiLayer() and viz::EndLayer() are used to draw to the ImGui layer. The ImGui layer behaves like other layers and is rendered with the layer opacity and priority.

The code below creates a Dear ImGui window with a checkbox used to conditionally show an image layer.

namespace viz = holoscan::viz;

bool show_image_layer = false;

while (!viz::WindowShouldClose()) {

viz::Begin();

viz::BeginImGuiLayer();

ImGui::Begin("Options");

ImGui::Checkbox("Image layer", &show_image_layer);

ImGui::End();

viz::EndLayer();

if (show_image_layer) {

viz::BeginImageLayer();

viz::ImageHost(...);

viz::EndLayer();

}

viz::End();

}

Dear ImGui is a static library and has no stable API. Therefore, the application and Holoviz have to use the same Dear ImGui version. For this reason the link target holoscan::viz::imgui is exported; make sure to link your application against that target.

Depth Map Layers

A depth map is a single channel 2d array where each element represents a depth value. The data is rendered as a 3d object using points, lines or triangles. The color for the elements can also be specified.

Supported formats for the depth map:

8-bit unsigned normalized format that has a single 8-bit depth component

32-bit signed float format that has a single 32-bit depth component

Supported format for the depth color map:

32-bit unsigned normalized format that has an 8-bit R component in byte 0, an 8-bit G component in byte 1, an 8-bit B component in byte 2, and an 8-bit A component in byte 3
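The byte layout of the color map format can be made concrete with a short sketch. The `pack_rgba8` helper is hypothetical and not part of Holoviz. It returns the pixel as one 32-bit integer with R in the least significant byte, which corresponds to byte 0 in memory only on little-endian hosts.

```cpp
#include <cstdint>

// Packs four 8-bit components into one 32-bit value with R in byte 0 (the
// least significant byte), G in byte 1, B in byte 2 and A in byte 3.
constexpr std::uint32_t pack_rgba8(std::uint8_t r, std::uint8_t g, std::uint8_t b,
                                   std::uint8_t a) {
  return static_cast<std::uint32_t>(r) | (static_cast<std::uint32_t>(g) << 8) |
         (static_cast<std::uint32_t>(b) << 16) | (static_cast<std::uint32_t>(a) << 24);
}
```

An opaque red element, for example, is `pack_rgba8(255, 0, 0, 255)`, which has the value `0xFF0000FF`.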

Depth maps are rendered in 3D and support camera movement.

std::vector<ops::HolovizOp::InputSpec> input_specs;

auto& depth_map_spec =

input_specs.emplace_back(ops::HolovizOp::InputSpec("depth_map", ops::HolovizOp::InputType::DEPTH_MAP));

depth_map_spec.depth_map_render_mode_ = ops::HolovizOp::DepthMapRenderMode::TRIANGLES;

auto visualizer = make_operator<ops::HolovizOp>("holoviz",

Arg("tensors", input_specs));

// the source provides a depth map named "depth_map" at the "output" port.

add_flow(source, visualizer, {{"output", "receivers"}});

By default a layer will fill the whole window. When using a view, the layer can be placed freely within the window.

Layers can also be placed in 3D space by specifying a 3D transformation matrix.

For geometry layers there is a default matrix which allows coordinates in the range of [0 … 1] instead of the Vulkan [-1 … 1] range. When specifying a matrix for a geometry layer, this default matrix is overwritten.
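The sketch below shows what a matrix with this effect could look like. It is an illustration only: the row-major layout, the `Mat4` and `Vec2` types and the `transform` helper are assumptions, and the actual default matrix used by Holoviz may differ in detail, for example in how it treats the z axis.

```cpp
#include <array>

using Mat4 = std::array<float, 16>;  // 4x4 matrix, row-major (layout is an assumption)

// Scales x and y by 2 and translates them by -1, so that [0, 1] maps to [-1, 1].
constexpr Mat4 kUnitToNdc = {{2.f, 0.f, 0.f, -1.f,
                              0.f, 2.f, 0.f, -1.f,
                              0.f, 0.f, 1.f, 0.f,
                              0.f, 0.f, 0.f, 1.f}};

struct Vec2 {
  float x;
  float y;
};

// Applies the matrix to the point (x, y, 0, 1) and returns the transformed x and y.
constexpr Vec2 transform(const Mat4& m, float x, float y) {
  return Vec2{m[0] * x + m[1] * y + m[3], m[4] * x + m[5] * y + m[7]};
}
```

With this matrix the top left corner (0, 0) ends up at (-1, -1) and the center (0.5, 0.5) at (0, 0). An application that supplies its own matrix and still wants to use [0 … 1] coordinates would have to include an equivalent scale and translation itself.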

When multiple views are specified, the layer is drawn multiple times, once for each specified view.

It’s possible to specify a negative height, which flips the image. When using a negative height, also adjust the y value to point to the lower left corner of the viewport instead of the upper left corner.
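The adjustment of the y value can be sketched as follows. The `View` struct is a hypothetical stand-in for the offset and size values of a view and is not a Holoviz type.

```cpp
// Hypothetical view rectangle: offset of the corner plus width and height.
struct View {
  float offset_x;
  float offset_y;
  float width;
  float height;
};

// Returns a view covering the same area but flipped vertically: the height is
// negated and the y offset moves from the upper left to the lower left corner.
constexpr View flip_vertically(const View& v) {
  return View{v.offset_x, v.offset_y + v.height, v.width, -v.height};
}
```

A view at y = 0.5 with height 0.25 becomes a view at y = 0.75 with height -0.25. Applying the function twice returns the original view.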

See holoviz_views.py.

Use viz::LayerAddView() to add a view to a layer.

When rendering 3d geometry using a geometry layer with 3d primitives or using a depth map layer the camera properties can either be set by the application or interactively changed by the user.

To interactively change the camera, use the mouse:

Orbit (LMB)

Pan (LMB + CTRL | MMB)

Dolly (LMB + SHIFT | RMB | Mouse wheel)

Look Around (LMB + ALT | LMB + CTRL + SHIFT)

Zoom (Mouse wheel + SHIFT)

See holoviz_camera.cpp.

Use viz::SetCamera() to change the camera.

Usually Holoviz opens a normal window on the Linux desktop. In that case the desktop compositor combines the Holoviz image with all other elements on the desktop. To avoid this extra compositing step, Holoviz can render to a display directly.

Configure a display for exclusive use

SSH into the machine and stop the X server:

sudo systemctl stop display-manager

To resume the display manager, run:

sudo systemctl start display-manager

The display to be used in exclusive mode needs to be disabled in the NVIDIA Settings application (nvidia-settings): open the X Server Display Configuration tab, select the display and under Configuration select Disabled. Press Apply.

Enable exclusive display in Holoviz

Arguments to pass to the Holoviz operator:

auto visualizer = make_operator<ops::HolovizOp>("holoviz",

Arg("use_exclusive_display", true), // required

Arg("display_name", "DP-2"), // optional

Arg("width", 2560), // optional

Arg("height", 1440), // optional

Arg("framerate", 240) // optional

);

Provide the name of the display and desired display mode properties to viz::Init().

If the name is nullptr then the first display is selected.

The name of the display can either be the EDID name as displayed in the NVIDIA Settings, or the output name provided by xrandr or hwinfo --monitor.

In this example output of xrandr, DP-2 would be an adequate display name to use:

Screen 0: minimum 8 x 8, current 4480 x 1440, maximum 32767 x 32767

DP-0 disconnected (normal left inverted right x axis y axis)

DP-1 disconnected (normal left inverted right x axis y axis)

DP-2 connected primary 2560x1440+1920+0 (normal left inverted right x axis y axis) 600mm x 340mm

2560x1440 59.98 + 239.97* 199.99 144.00 120.00 99.95

1024x768 60.00

800x600 60.32

640x480 59.94

USB-C-0 disconnected (normal left inverted right x axis y axis)
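If the display name should be picked programmatically, the xrandr text can be scanned for connected outputs. The helper below is a hypothetical sketch that is independent of Holoviz; it assumes the output format shown above, where the first word of a line is the output name and the second word is the connection state.

```cpp
#include <cassert>
#include <sstream>
#include <string>
#include <vector>

// Returns the names of all outputs that xrandr reports as connected.
// Matching lines look like "DP-2 connected primary 2560x1440+1920+0 ...".
std::vector<std::string> connected_outputs(const std::string& xrandr_output) {
  std::vector<std::string> names;
  std::istringstream lines(xrandr_output);
  std::string line;
  while (std::getline(lines, line)) {
    std::istringstream words(line);
    std::string name, state;
    // "disconnected" does not match because whole words are compared.
    if ((words >> name >> state) && state == "connected") { names.push_back(name); }
  }
  return names;
}
```

For the example output above, the function returns a single entry, DP-2.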

In this example output of hwinfo, MSI MPG343CQR would be an adequate display name to use:

$ hwinfo --monitor | grep Model

Model: "MSI MPG343CQR"

By default, Holoviz uses CUDA stream 0 for all CUDA operations. Using the default stream can affect concurrency of CUDA operations, see stream synchronization behavior for more information.

The operator uses a holoscan::CudaStreamPool instance if one is provided by the cuda_stream_pool argument.

The stream pool is used to create a CUDA stream used by all Holoviz operations.

const std::shared_ptr<holoscan::CudaStreamPool> cuda_stream_pool =

make_resource<holoscan::CudaStreamPool>("cuda_stream", 0, 0, 0, 1, 5);

auto visualizer =

make_operator<holoscan::ops::HolovizOp>("visualizer",

Arg("cuda_stream_pool") = cuda_stream_pool);

When providing CUDA resources to Holoviz, for example through viz::ImageCudaDevice(), Holoviz uses CUDA operations to access that memory. The CUDA stream used by these operations can be set by calling viz::SetCudaStream(). The stream can be changed at any time.

The rendered framebuffer can be read back. This is useful when doing offscreen rendering or running Holoviz in a headless environment.

Reading the depth buffer is not supported when using the Holoviz operator.

To read back the color framebuffer set the enable_render_buffer_output parameter to true and provide an allocator to the operator.

The framebuffer is emitted on the render_buffer_output port.

std::shared_ptr<holoscan::ops::HolovizOp> visualizer =

make_operator<ops::HolovizOp>("visualizer",

Arg("enable_render_buffer_output", true),

Arg("allocator") = make_resource<holoscan::UnboundedAllocator>("allocator"),

Arg("cuda_stream_pool") = cuda_stream_pool);

add_flow(visualizer, destination, {{"render_buffer_output", "input"}});

The rendered color or depth buffer can be read back using viz::ReadFramebuffer().

The sRGB color space is supported for both images and the framebuffer. By default, Holoviz uses a linearly encoded framebuffer.

To switch the framebuffer color format set the framebuffer_srgb parameter to true.

To use sRGB encoded images, set the image_format field of the InputSpec structure to an sRGB image format.

Use viz::SetSurfaceFormat() to set the framebuffer surface format to an sRGB color format.

To use sRGB encoded images, set the fmt parameter of viz::ImageCudaDevice(), viz::ImageCudaArray() or viz::ImageHost() to an sRGB image format.
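As background on the difference between linear and sRGB encoded values, the sketch below implements the standard sRGB transfer function for a single color component. It is not Holoviz code; when an sRGB format is selected, the conversion is normally performed by the graphics hardware, and the function names here are chosen for illustration only.

```cpp
#include <cassert>
#include <cmath>

// Converts one linear color component in [0, 1] to its sRGB encoded value.
float linear_to_srgb(float c) {
  assert(c >= 0.f && c <= 1.f);
  return (c <= 0.0031308f) ? 12.92f * c : 1.055f * std::pow(c, 1.0f / 2.4f) - 0.055f;
}

// Converts one sRGB encoded color component in [0, 1] back to a linear value.
float srgb_to_linear(float c) {
  assert(c >= 0.f && c <= 1.f);
  return (c <= 0.04045f) ? c / 12.92f : std::pow((c + 0.055f) / 1.055f, 2.4f);
}
```

A linear value of 0.5 encodes to roughly 0.735, which is why image data that is interpreted with the wrong encoding appears too dark or too bright.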

Class documentation

Examples

There are multiple examples both in Python and C++ showing how to use various features of the Holoviz operator.

Concepts

The Holoviz module uses the immediate mode design pattern for its API, inspired by the Dear ImGui library. In contrast to retained mode, for which most APIs are designed, no objects are created and stored by the application. This makes it fast and easy to make visualization changes in a Holoscan application.
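The idea of immediate mode can be shown with a toy model. The `ToyRenderer` class below is hypothetical and only mimics the shape of such an API: every frame starts empty, the application describes the complete content again, and nothing has to be created, updated or destroyed between frames.

```cpp
#include <cassert>
#include <string>
#include <vector>

// Toy immediate-mode renderer; not the Holoviz API.
class ToyRenderer {
 public:
  // Starts a new frame from scratch; nothing is carried over.
  void begin() { commands_.clear(); }
  // Records one thing to draw in the current frame.
  void draw(const std::string& what) { commands_.push_back(what); }
  // Ends the frame and returns what would be presented.
  std::vector<std::string> end() const { return commands_; }

 private:
  std::vector<std::string> commands_;  // content of the current frame only
};

// Describes one frame; called again for every frame, like the body of a render loop.
std::vector<std::string> describe_frame(ToyRenderer& renderer, bool show_overlay) {
  renderer.begin();
  renderer.draw("image");
  if (show_overlay) { renderer.draw("overlay"); }
  return renderer.end();
}
```

Showing or hiding the overlay is just a condition in the per-frame code. In a retained-mode design the application would instead have to add an overlay object to a scene and remove it again later.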

Instances

The Holoviz module uses a thread-local instance object to store its internal state. The instance object is created when the Holoviz module is first called from a thread. All Holoviz module functions called from that thread use this instance.

When calling into the Holoviz module from threads other than the one from which the Holoviz module functions were first called, make sure to call viz::GetCurrent() and viz::SetCurrent() in the respective threads.

There are use cases where multiple instances are needed, for example to open multiple windows. Instances can be created by calling viz::Create(). Call viz::SetCurrent() to make an instance current before calling the Holoviz module functions to be executed for the window the instance belongs to.
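The "current instance" pattern described above can be modeled in a few lines. Everything in this sketch is hypothetical (the `Instance` struct and the functions in the `toy` namespace); it only illustrates how functions without an explicit instance argument act on whichever instance was made current in the calling thread.

```cpp
#include <cassert>
#include <string>

// Toy model of the "current instance" pattern; all names are hypothetical.
struct Instance {
  std::string title;
  int frames_rendered = 0;
};

namespace toy {
// One "current" pointer per thread, so each thread selects its own instance.
thread_local Instance* current_instance = nullptr;

void set_current(Instance* instance) { current_instance = instance; }
Instance* get_current() { return current_instance; }

// Stands in for any module function: it implicitly acts on the current instance.
void render_frame() {
  assert(current_instance != nullptr);
  ++current_instance->frames_rendered;
}
}  // namespace toy
```

With two instances, one per window, the application calls `toy::set_current()` before issuing the calls for each window. Because the pointer is thread-local, a newly started thread has no current instance until it calls `toy::set_current()` itself.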

Getting started

The code below creates a window and displays an image.

First the Holoviz module needs to be initialized. This is done by calling viz::Init().

The elements to display are defined in the render loop, termination of the loop is checked with viz::WindowShouldClose().

The definition of the displayed content starts with viz::Begin() and ends with viz::End(). viz::End() starts the rendering and displays the rendered result.

Finally, the Holoviz module is shut down with viz::Shutdown().

#include "holoviz/holoviz.hpp"

namespace viz = holoscan::viz;

viz::Init("Holoviz Example");

while (!viz::WindowShouldClose()) {

viz::Begin();

viz::BeginImageLayer();

viz::ImageHost(width, height, viz::ImageFormat::R8G8B8A8_UNORM, image_data);

viz::EndLayer();

viz::End();

}

viz::Shutdown();

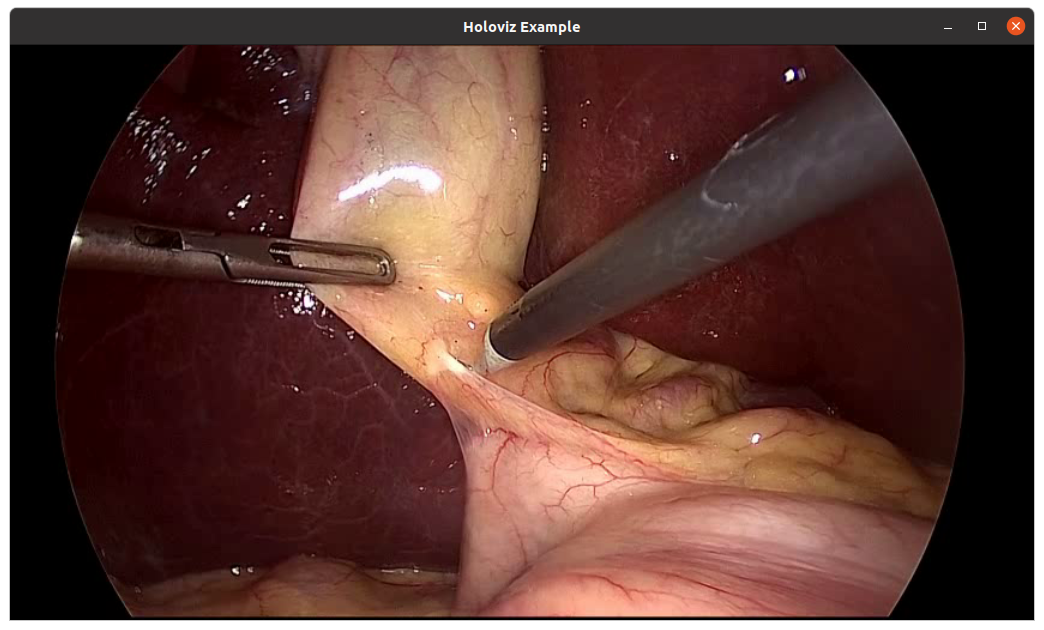

Result:

Fig. 20 Holoviz example app

API

Examples

There are multiple examples showing how to use various features of the Holoviz module.