🚀 NeMo AutoModel#

📣 News and Discussions#

[12/18/2025] FunctionGemma is out! Finetune it with NeMo AutoModel!

[12/15/2025] NVIDIA-Nemotron-3-Nano-30B-A3B is out! Finetune it with NeMo AutoModel!

[11/6/2025] Accelerating Large-Scale Mixture-of-Experts Training in PyTorch

[10/6/2025] Enabling PyTorch Native Pipeline Parallelism for 🤗 Hugging Face Transformer Models

[9/22/2025] Fine-tune Hugging Face Models Instantly with Day-0 Support with NVIDIA NeMo AutoModel

[9/18/2025] 🚀 NeMo Framework Now Supports Google Gemma 3n: Efficient Multimodal Fine-tuning Made Simple

Overview#

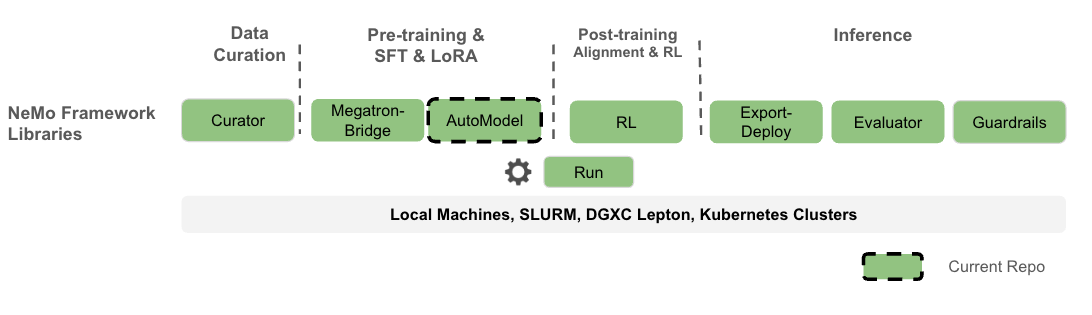

NeMo AutoModel is a PyTorch DTensor-native SPMD open-source training library under the NVIDIA NeMo Framework, designed to streamline and scale training and fine-tuning for LLMs and VLMs. Built for flexibility, reproducibility, and scale, NeMo AutoModel supports everything from small-scale experiments to massive multi-GPU, multi-node deployments, enabling fast experimentation in both research and production environments.

What you can expect:

Hackable, with a modular design that allows easy integration, customization, and quick research prototypes.

Minimal ceremony: YAML‑driven recipes; override any field via CLI.

High performance and flexibility with custom kernels and DTensor support.

Seamless integration with Hugging Face for day-0 model support, ease of use, and a wide range of supported models.

Efficient resource management using k8s and Slurm, enabling scalable and flexible deployment across configurations.

Comprehensive documentation that is both detailed and user-friendly, with practical examples.

⚠️ Note: NeMo AutoModel is under active development. New features, improvements, and documentation updates are released regularly. We are working toward a stable release, so expect the interface to solidify over time. Your feedback and contributions are welcome, and we encourage you to follow along as new updates roll out.

Why PyTorch Distributed and SPMD#

One program, any scale: The same training script runs on 1 GPU or 1000+ by changing the mesh.

PyTorch Distributed native: Partition model/optimizer states with `DeviceMesh` + placements (`Shard`, `Replicate`).

SPMD first: Parallelism is configuration. No model rewrites when scaling up or changing strategy.

Decoupled concerns: Model code stays pure PyTorch; parallel strategy lives in config.

Composability: Mix tensor, sequence, and data parallel by editing placements.

Portability: Fewer bespoke abstractions; easier to reason about failure modes and restarts.


TL;DR: SPMD turns “how to parallelize” into a runtime layout choice, not a code fork.
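The placement idea can be illustrated with a toy, pure-Python model of how a row-sharded (`Shard(0)`-like) or replicated (`Replicate()`-like) placement decides what each rank stores on a 1-D mesh. This is a sketch under simplifying assumptions: the "tensor" is a list of rows, chunking follows `torch.chunk`-style ceil division, and `local_rows` is a hypothetical helper rather than the DTensor API. Only `mesh_size` and the placement change between runs; the calling code stays the same.

```python
from typing import List, Sequence


def local_rows(global_rows: Sequence[int], mesh_size: int, rank: int, placement: str) -> List[int]:
    """Rows stored by `rank` on a 1-D mesh of `mesh_size` devices (toy model, not DTensor)."""
    if not 0 <= rank < mesh_size:
        raise ValueError("rank must be in [0, mesh_size)")
    if placement == "replicate":
        # Replicate-style: every rank holds a full copy.
        return list(global_rows)
    if placement == "shard":
        # Shard(0)-style: split dim 0 into ceil(n / mesh_size)-row chunks, as torch.chunk does;
        # trailing ranks may receive a smaller or empty chunk.
        chunk = -(-len(global_rows) // mesh_size)
        start = rank * chunk
        return list(global_rows[start:start + chunk])
    raise ValueError(f"unknown placement: {placement!r}")


weights = list(range(10))  # stand-in for the 10 rows of a weight matrix
for mesh_size in (1, 4):   # same code path, different mesh size
    print(mesh_size, [local_rows(weights, mesh_size, r, "shard") for r in range(mesh_size)])
# 1 [[0, 1, 2, 3, 4, 5, 6, 7, 8, 9]]
# 4 [[0, 1, 2], [3, 4, 5], [6, 7, 8], [9]]
```

Switching the placement string to `"replicate"` gives every rank the full list, which mirrors how changing a placement in config changes the layout without touching the model code.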

Feature Roadmap#

✅ Available now | 🔜 Coming in 26.02

✅ Advanced Parallelism - PyTorch native FSDP2, TP, CP, and SP for distributed training.

✅ HSDP - Multi-node Hybrid Sharding Data Parallelism based on FSDP2.

✅ Pipeline Support - Torch-native support for pipelining composable with FSDP2 and DTensor (3D Parallelism).

✅ Environment Support - Support for SLURM and interactive training.

✅ Learning Algorithms - SFT (Supervised Fine-Tuning), and PEFT (Parameter Efficient Fine-Tuning).

✅ Pre-training - Support for model pre-training, including DeepSeekV3.

✅ Knowledge Distillation - Support for knowledge distillation with LLMs; VLM support will be added post 25.09.

✅ Hugging Face Integration - Works with dense models (e.g., Qwen, Llama3) and large MoEs (e.g., DeepSeek-V3).

✅ Sequence Packing - Packs multiple short sequences into each training sample to reduce padding and significantly improve training throughput.

✅ FP8 and mixed precision - FP8 support with torchao, requires torch.compile-supported models.

✅ DCP - Distributed Checkpoint support with SafeTensors output.

✅ VLM: Support for finetuning VLMs (e.g., Qwen2-VL, Gemma-3-VL). More families to be included in the future.

✅ Extended MoE support - GPT-OSS, Qwen3 (Coder-480B-A35B, etc.), Qwen-next.

🔜 Transformers v5 🤗 - Support for transformers v5 🤗 with device-mesh driven parallelism.

🔜 Muon & Dion - Support for Muon and Dion optimizers.

🔜 SonicMoE - Optimized MoE implementation for faster expert computation.

🔜 FP8 MoE - FP8 precision training and inference for MoE models.

🔜 Cudagraph with MoE - CUDA graph support for MoE layers to reduce kernel launch overhead.

🔜 Extended VLM Support - DeepSeek OCR, Qwen3 VL 235B, Kimi-VL, GLM4.5V

🔜 Extended LLM Support - Qwen Coder 480B Instruct, MiniMax2.1, and more

🔜 Kubernetes - Multi-node job launch with k8s.
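Sequence packing, listed above, reduces padding by concatenating several short examples into one fixed-length training row. The sketch below shows only the bin-packing step, using a first-fit-decreasing heuristic chosen here for illustration; AutoModel's own packing strategy may differ, and a real implementation also needs per-sequence attention masks or position-ID resets so that packed examples do not attend to one another. `pack_sequences` and the example lengths are hypothetical.

```python
from typing import List


def pack_sequences(lengths: List[int], max_len: int) -> List[List[int]]:
    """Greedy first-fit-decreasing packing of sequence indices into rows of `max_len` tokens."""
    packs: List[List[int]] = []  # indices of the sequences placed in each row
    space: List[int] = []        # remaining token budget of each row
    # Place the longest sequences first; short ones then fill the gaps.
    order = sorted(range(len(lengths)), key=lambda i: lengths[i], reverse=True)
    for i in order:
        n = lengths[i]
        if n > max_len:
            raise ValueError(f"sequence {i} ({n} tokens) exceeds max_len={max_len}")
        for p, free in enumerate(space):
            if n <= free:  # first row with enough room
                packs[p].append(i)
                space[p] -= n
                break
        else:  # no existing row fits: open a new one
            packs.append([i])
            space.append(max_len - n)
    return packs


lengths = [900, 300, 200, 1500, 600, 100]
packs = pack_sequences(lengths, max_len=2048)
print(packs)                        # [[3, 1, 2], [0, 4, 5]]
print(len(lengths) * 2048, "token slots without packing")
print(len(packs) * 2048, "token slots with packing")
```

Here six examples that would occupy six padded 2048-token rows fit into two rows, which is where the throughput gain comes from.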

Getting Started#

We recommend using uv for reproducible Python environments.

# Set up the environment before running any commands
uv venv
uv sync --frozen --all-extras

uv pip install nemo_automodel # latest release
# or: uv pip install git+https://github.com/NVIDIA-NeMo/Automodel.git

uv run python -c "import nemo_automodel; print('AutoModel ready')"

Run a Recipe#

To run a NeMo AutoModel recipe, you need a recipe script (e.g., LLM, VLM) and a YAML config file (e.g., LLM, VLM):

# Command invocation format:
uv run <recipe_script_path> --config <yaml_config_path>

# LLM example: multi-GPU with FSDP2
uv run torchrun --nproc-per-node=8 examples/llm_finetune/finetune.py --config examples/llm_finetune/llama3_2/llama3_2_1b_hellaswag.yaml

# VLM example: single GPU fine-tuning (Gemma-3-VL) with LoRA
uv run examples/vlm_finetune/finetune.py --config examples/vlm_finetune/gemma3/gemma3_vl_4b_cord_v2_peft.yaml

LLM Pre-training#

LLM Pre-training Single Node#

We provide an example pre-training experiment using the FineWeb dataset with a nano-GPT model, ideal for quick experimentation on a single node.

uv run torchrun --nproc-per-node=8 \
    examples/llm_pretrain/pretrain.py \
    -c examples/llm_pretrain/nanogpt_pretrain.yaml

LLM Supervised Fine-Tuning (SFT)#

We provide an example SFT experiment using the SQuAD dataset.

LLM SFT Single Node#

The default SFT configuration is set to run on a single GPU. To start the experiment:

uv run python3 \
    examples/llm_finetune/finetune.py \
    -c examples/llm_finetune/llama3_2/llama3_2_1b_squad.yaml

This fine-tunes the Llama3.2-1B model on the SQuAD dataset using 1 GPU.

To use multiple GPUs on a single node in an interactive environment, run the same command with torchrun and set the `--nproc-per-node` argument to the number of GPUs you need.

uv run torchrun --nproc-per-node=8 \
    examples/llm_finetune/finetune.py \
    -c examples/llm_finetune/llama3_2/llama3_2_1b_squad.yaml

Alternatively, you can use the automodel CLI application to launch the same job, for example:

uv run automodel finetune llm \
    --nproc-per-node=8 \
    -c examples/llm_finetune/llama3_2/llama3_2_1b_squad.yaml

LLM SFT Multi Node#

You can use the automodel CLI application to launch a job on a SLURM cluster, for example:

# First, add a `slurm` section to your YAML config. Appending with `>>` keeps the rest of the recipe config intact:
cat << EOF >> examples/llm_finetune/llama3_2/llama3_2_1b_squad.yaml
slurm:
  job_name: llm-finetune # set to the job name you want to use
  nodes: 2 # set to the needed number of nodes
  ntasks_per_node: 8
  time: 00:30:00
  account: your_account
  partition: gpu
  container_image: nvcr.io/nvidia/nemo:25.07
  gpus_per_node: 8 # This adds "#SBATCH --gpus-per-node=8" to the script
  # Optional: Add extra mount points if needed
  extra_mounts:
    - /lustre:/lustre
  # Optional: Specify custom HF_HOME location (will auto-create if not specified)
  hf_home: /path/to/your/HF_HOME
  # Optional: Specify custom env vars
  # env_vars:
  #   ENV_VAR: value
  # Optional: Specify custom job directory (defaults to cwd/slurm_jobs)
  # job_dir: /path/to/slurm/jobs
EOF

# Using the updated YAML, you can launch the job.
uv run automodel finetune llm \
    -c examples/llm_finetune/llama3_2/llama3_2_1b_squad.yaml
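The `gpus_per_node` comment in the config hints at how `slurm` keys become batch-script directives. The following is a hypothetical sketch of that mapping, not the `automodel` launcher's actual code: `SBATCH_FLAGS` and `sbatch_header` are illustrative names, the flag names are standard `sbatch` options, and keys without an `sbatch` equivalent in this sketch (such as `container_image`, `extra_mounts`, `hf_home`) are simply skipped.

```python
from typing import Dict, List

# Hypothetical mapping from YAML `slurm` keys to standard sbatch options.
SBATCH_FLAGS = {
    "job_name": "--job-name",
    "nodes": "--nodes",
    "ntasks_per_node": "--ntasks-per-node",
    "time": "--time",
    "account": "--account",
    "partition": "--partition",
    "gpus_per_node": "--gpus-per-node",
}


def sbatch_header(slurm_cfg: Dict[str, object]) -> List[str]:
    """Render #SBATCH directives for the keys that map to sbatch options; skip the rest."""
    lines = ["#!/bin/bash"]
    for key, flag in SBATCH_FLAGS.items():
        if key in slurm_cfg:
            lines.append(f"#SBATCH {flag}={slurm_cfg[key]}")
    return lines


cfg = {
    "job_name": "llm-finetune", "nodes": 2, "ntasks_per_node": 8, "time": "00:30:00",
    "account": "your_account", "partition": "gpu", "gpus_per_node": 8,
    "container_image": "nvcr.io/nvidia/nemo:25.07",  # not an sbatch option; skipped here
}
print("\n".join(sbatch_header(cfg)))
```

Keeping the mapping in one table makes it easy to see which config keys affect scheduling and which are handled elsewhere.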

LLM Parameter-Efficient Fine-Tuning (PEFT)#

We provide a PEFT example using the HellaSwag dataset.

LLM PEFT Single Node#

# Memory‑efficient SFT with LoRA
uv run examples/llm_finetune/finetune.py \
    --config examples/llm_finetune/llama3_2/llama3_2_1b_hellaswag_peft.yaml
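LoRA freezes the pretrained weight and trains a low-rank update built from two small matrices, A (r × d_in) and B (d_out × r), so one adapted linear layer adds r · (d_in + d_out) trainable parameters instead of d_in · d_out. The arithmetic below assumes a square 2048 × 2048 projection and a few typical ranks; the hidden size and ranks are illustrative assumptions, not values read from the HellaSwag PEFT config. Because optimizers such as Adam keep state only for trainable parameters, this reduction also shrinks optimizer memory.

```python
def lora_params(d_in: int, d_out: int, rank: int) -> int:
    """Trainable parameters LoRA adds to one d_in x d_out linear layer: A (rank x d_in) + B (d_out x rank)."""
    return rank * (d_in + d_out)


d = 2048        # assumed hidden size of a square projection (illustrative)
full = d * d    # parameters updated by full fine-tuning of that layer
for r in (8, 16, 32):  # illustrative LoRA ranks
    lora = lora_params(d, d, r)
    print(f"rank={r}: {lora:,} trainable vs {full:,} full ({100 * lora / full:.2f}%)")
```

At rank 8 this is 32,768 trainable parameters against 4,194,304 for the full layer, under 1% of the original.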

# You can override any parameter by appending it to the command. For example,
# to increase the micro-batch size:
uv run examples/llm_finetune/finetune.py \
    --config examples/llm_finetune/llama3_2/llama3_2_1b_hellaswag_peft.yaml \
    --step_scheduler.local_batch_size 16

# The command above sets `local_batch_size` to 16 in the
# `step_scheduler` section of the YAML file.
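Conceptually, a dotted override such as `--step_scheduler.local_batch_size 16` walks the nested YAML sections and replaces a single leaf value. The sketch below models that behavior on a plain dictionary; `apply_override` and `_parse_scalar` are hypothetical helpers, the type-coercion rules are assumptions, and the starting value of 8 is a placeholder, so AutoModel's real CLI parser may handle types and errors differently.

```python
import copy
from typing import Any, Dict


def _parse_scalar(text: str) -> Any:
    """Best-effort conversion of a CLI string to bool/int/float; otherwise keep the string."""
    lowered = text.lower()
    if lowered in ("true", "false"):
        return lowered == "true"
    for cast in (int, float):
        try:
            return cast(text)
        except ValueError:
            pass
    return text


def apply_override(config: Dict[str, Any], dotted_key: str, value: str) -> Dict[str, Any]:
    """Return a copy of `config` with `section.sub.key` set to the parsed value."""
    updated = copy.deepcopy(config)  # leave the caller's config untouched
    *parents, leaf = dotted_key.split(".")
    node = updated
    for part in parents:
        node = node.setdefault(part, {})  # create missing sections on the way down
    node[leaf] = _parse_scalar(value)
    return updated


cfg = {"step_scheduler": {"local_batch_size": 8}}  # placeholder starting value
# Models appending `--step_scheduler.local_batch_size 16` to the command line.
print(apply_override(cfg, "step_scheduler.local_batch_size", "16"))
# {'step_scheduler': {'local_batch_size': 16}}
```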

[!NOTE] Launching a multi-node PEFT example requires only adding a `slurm` section to your config, similarly to the SFT case.

VLM Supervised Fine-Tuning (SFT)#

We provide a VLM SFT example using Qwen2.5‑VL for end‑to‑end fine‑tuning on image‑text data.

VLM SFT Single Node#

# Qwen2.5‑VL on 8 GPUs
uv run torchrun --nproc-per-node=8 \
    examples/vlm_finetune/finetune.py \
    --config examples/vlm_finetune/qwen2_5/qwen2_5_vl_3b_rdr.yaml

VLM Parameter-Efficient Fine-Tuning (PEFT)#

We provide a VLM PEFT (LoRA) example for memory‑efficient adaptation with Gemma3 VLM.

VLM PEFT Single Node#

# Gemma 3 VLM with LoRA on 8 GPUs
uv run torchrun --nproc-per-node=8 \
    examples/vlm_finetune/finetune.py \
    --config examples/vlm_finetune/gemma3/gemma3_vl_4b_medpix_peft.yaml

Supported Models#

NeMo AutoModel provides native support for a wide range of models available on the Hugging Face Hub, enabling efficient fine-tuning across domains. Below is a small sample of ready‑to‑use families (train as‑is or swap in any compatible 🤗 causal LM); you can specify nearly any LLM/VLM available on the 🤗 Hub:

| Domain | Model Family | Model ID | Recipes |
|---|---|---|---|
| LLM | GPT-OSS | | |
| LLM | DeepSeek | | |
| LLM | Moonlight | | |
| LLM | LLaMA | | |
| LLM | Mistral | | |
| LLM | Qwen | | |
| LLM | Gemma | | |
| LLM | Phi | | |
| LLM | Seed | | |
| LLM | Baichuan | | |
| VLM | Gemma | | |

Performance#

NeMo AutoModel delivers high training throughput on NVIDIA GPUs. Below are highlights from our benchmark results:

| Model | #GPUs | Seq Length | Model TFLOPs/sec/GPU | Tokens/sec/GPU | Kernel Optimizations |
|---|---|---|---|---|---|
| DeepSeek V3 671B | 256 | 4096 | 250 | 1,002 | TE + DeepEP |
| GPT-OSS 20B | 8 | 4096 | 279 | 13,058 | TE + DeepEP + FlexAttn |
| Qwen3 MoE 30B | 8 | 4096 | 212 | 11,842 | TE + DeepEP |

For complete benchmark results including configuration details, see the Performance Summary.
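The per-GPU columns can be turned into job-level figures with simple arithmetic: multiplying Tokens/sec/GPU by #GPUs gives aggregate throughput, and dividing Model TFLOPs/sec/GPU by Tokens/sec/GPU gives the implied model FLOPs per token. These are derived ratios of the table's own numbers, not additional benchmark results, and the time-per-billion-tokens figure assumes throughput stays constant for the whole run.

```python
# Per-GPU figures copied from the benchmark table above.
results = {
    "DeepSeek V3 671B": {"gpus": 256, "tflops_per_gpu": 250, "tokens_per_gpu": 1_002},
    "GPT-OSS 20B": {"gpus": 8, "tflops_per_gpu": 279, "tokens_per_gpu": 13_058},
    "Qwen3 MoE 30B": {"gpus": 8, "tflops_per_gpu": 212, "tokens_per_gpu": 11_842},
}

totals = {}  # aggregate tokens/sec for the whole job
for name, r in results.items():
    totals[name] = r["gpus"] * r["tokens_per_gpu"]
    # Implied model FLOPs per token: (FLOPs/sec/GPU) / (tokens/sec/GPU), in GFLOPs.
    gflops_per_token = r["tflops_per_gpu"] * 1e12 / r["tokens_per_gpu"] / 1e9
    # Hours to process 1B tokens, assuming constant throughput.
    hours_per_billion = 1e9 / totals[name] / 3600
    print(f"{name}: {totals[name]:,} tokens/sec total, "
          f"~{gflops_per_token:.0f} GFLOPs/token, {hours_per_billion:.2f} h per 1B tokens")
```

For example, the DeepSeek V3 run's 1,002 tokens/sec/GPU across 256 GPUs amounts to 256,512 tokens/sec for the job as a whole.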

🔌 Interoperability#

NeMo RL: Use AutoModel checkpoints directly as starting points for DPO/RM/GRPO pipelines.

Hugging Face: Train any LLM/VLM from 🤗 without format conversion.

Megatron Bridge: Optional conversions to/from Megatron formats for specific workflows.

🗂️ Project Structure#

NeMo-Automodel/
├── examples
│ ├── llm_finetune # LLM finetune recipes
│ ├── llm_kd # LLM knowledge-distillation recipes
│ ├── llm_pretrain # LLM pretrain recipes
│ ├── vlm_finetune # VLM finetune recipes
│ └── vlm_generate # VLM generate recipes
├── nemo_automodel
│ ├── _cli
│ │ └── app.py # the `automodel` CLI job launcher
│ ├── components # Core library
│ │ ├── _peft # PEFT implementations (LoRA)
│ │ ├── _transformers # HF model integrations
│ │ ├── checkpoint # Distributed checkpointing
│ │ ├── config
│ │ ├── datasets # LLM (HellaSwag, etc.) & VLM datasets
│ │ ├── distributed # FSDP2, Megatron FSDP, Pipelining, etc.
│ │ ├── launcher # The job launcher component (SLURM)
│ │ ├── loggers # loggers
│ │ ├── loss # Optimized loss functions
│ │ ├── models # User-defined model examples
│ │ ├── moe # Optimized kernels for MoE models
│ │ ├── optim # Optimizer/LR scheduler components
│ │ ├── quantization # FP8
│ │ ├── training # Train utils
│ │ └── utils # Misc utils
│ ├── recipes
│ │ ├── llm # Main LLM train loop
│ │ └── vlm # Main VLM train loop
│ └── shared
└── tests/ # Comprehensive test suite

Citation#

If you use NeMo AutoModel in your research, please cite it using the following BibTeX entry:

@misc{nemo-automodel,
  title = {NeMo AutoModel: DTensor‑native SPMD library for scalable and efficient training},
  howpublished = {\url{https://github.com/NVIDIA-NeMo/Automodel}},
  year = {2025},
  note = {GitHub repository},
}

🤝 Contributing#

We welcome contributions! Please see our Contributing Guide for details.

📄 License#

NVIDIA NeMo AutoModel is licensed under the Apache License 2.0.