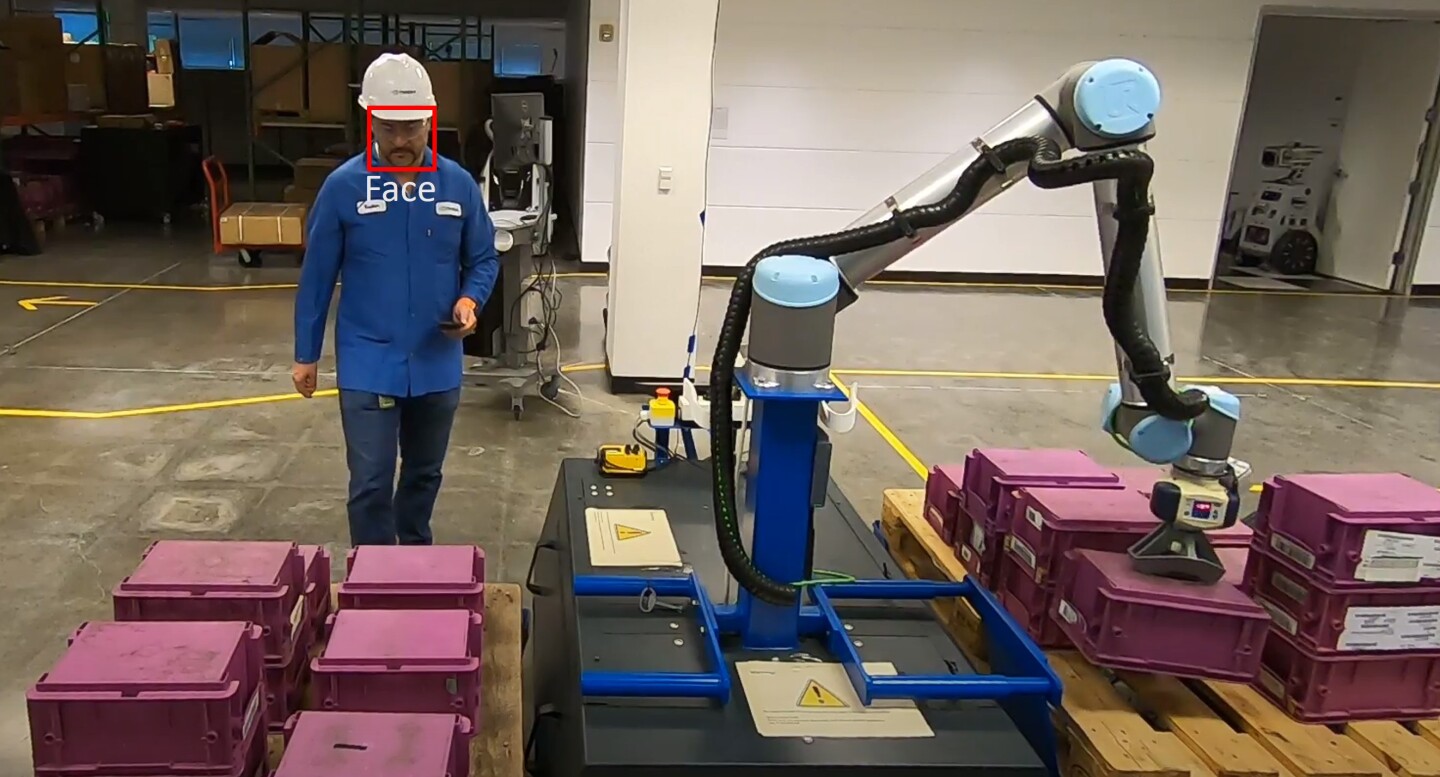

FaceDetect

The FaceDetect model detects one or more faces in a given image or video. Compared to the FaceirNet model, this model gives better results with RGB images and smaller faces.

The model is based on the NVIDIA DetectNet_v2 detector with ResNet18 as the feature extractor. This architecture, also known as GridBox object detection, performs bounding-box regression on a uniform grid over the input image. The GridBox system divides the input image into a grid in which each cell predicts four normalized bounding-box parameters (xc, yc, w, h) and a confidence value per output class.
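As a rough illustration of how per-cell predictions map back to pixel coordinates, the sketch below decodes (xc, yc, w, h, confidence) tuples into corner-format boxes. It assumes the four parameters are normalized to the range [0, 1] relative to the full image; the exact normalization used by DetectNet_v2 may differ. The function name `decode_grid` and the `conf_threshold` parameter are hypothetical, not part of any NVIDIA API.

```python
def decode_grid(cells, image_w, image_h, conf_threshold=0.5):
    """Convert normalized per-cell predictions to pixel-space boxes.

    cells: iterable of (xc, yc, w, h, conf) tuples for a single class.
    ASSUMPTION: values are normalized to [0, 1] relative to the image size.
    Returns a list of (x1, y1, x2, y2, conf) tuples in pixels.
    """
    boxes = []
    for xc, yc, w, h, conf in cells:
        # Drop low-confidence cells before any further processing.
        if conf < conf_threshold:
            continue
        # Convert center/size form to corner form, then scale to pixels.
        x1 = (xc - w / 2.0) * image_w
        y1 = (yc - h / 2.0) * image_h
        x2 = (xc + w / 2.0) * image_w
        y2 = (yc + h / 2.0) * image_h
        boxes.append((x1, y1, x2, y2, conf))
    return boxes


# Example: two grid cells, one of which falls below the confidence threshold.
raw_cells = [(0.5, 0.5, 0.25, 0.5, 0.9), (0.1, 0.1, 0.1, 0.1, 0.2)]
decoded = decode_grid(raw_cells, image_w=640, image_h=480)
```

The boxes produced this way are still raw detections: neighbouring cells that cover the same face typically yield several overlapping boxes.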

The raw normalized bounding-box and confidence detections need to be post-processed by a clustering algorithm such as DBSCAN or NMS to produce the final bounding-box coordinates and category labels.
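To make the post-processing step concrete, here is a minimal sketch of greedy non-maximum suppression (NMS) in plain Python. It assumes each detection is a tuple (x1, y1, x2, y2, score) in pixel coordinates; the function names and the default IoU threshold of 0.5 are illustrative choices, not values taken from the model's configuration.

```python
def iou(a, b):
    """Intersection over union of two (x1, y1, x2, y2, ...) boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def nms(boxes, iou_threshold=0.5):
    """Greedy NMS: keep the highest-scoring box, drop boxes that overlap it."""
    remaining = sorted(boxes, key=lambda b: b[4], reverse=True)
    kept = []
    while remaining:
        best = remaining.pop(0)
        kept.append(best)
        # Discard every box whose overlap with the kept box is too high.
        remaining = [b for b in remaining if iou(best, b) <= iou_threshold]
    return kept


# Example: the first two boxes cover the same face; the third is separate.
detections = [
    (0, 0, 10, 10, 0.9),
    (1, 1, 11, 11, 0.8),
    (50, 50, 60, 60, 0.7),
]
final_boxes = nms(detections)
```

DBSCAN takes a different approach: it groups nearby boxes into clusters and emits one merged box per cluster rather than picking a single winner.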

Face Detection use case

The training algorithm optimizes the network to minimize the localization and confidence loss for the objects. This model was trained using the DetectNet_v2 training app in TAO Toolkit v3.0. Training is carried out in two phases. In the first phase, the network is trained with regularization to facilitate pruning. The network is then pruned, removing channels whose kernel norms fall below the pruning threshold. In the second phase, the pruned network is retrained without regularization.
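The channel-selection idea behind the pruning step can be sketched as follows. This is only an illustration, not the TAO Toolkit implementation: it assumes the kernel norm is the L2 norm of each output channel's weights, normalized by the largest norm in the layer, and the helper name `channels_to_keep` is hypothetical.

```python
import math


def channels_to_keep(kernels, threshold):
    """Return indices of output channels whose normalized L2 norm
    is at or above the pruning threshold.

    kernels: list of flat weight lists, one per output channel.
    ASSUMPTION: norms are divided by the largest norm in the layer.
    """
    norms = [math.sqrt(sum(w * w for w in kernel)) for kernel in kernels]
    max_norm = max(norms) if norms else 0.0
    if max_norm == 0.0:
        # Nothing to compare against; leave the layer untouched.
        return list(range(len(kernels)))
    return [i for i, n in enumerate(norms) if n / max_norm >= threshold]


# Example: three channels with L2 norms of about 5.0, 0.5, and 1.0.
layer_kernels = [[3.0, 4.0], [0.3, 0.4], [0.0, 1.0]]
kept_channels = channels_to_keep(layer_kernels, threshold=0.15)
```

Regularization in the first phase pushes unimportant kernels toward small norms, which is what makes a threshold of this kind effective at separating removable channels from useful ones.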

The primary use case for this model is to detect faces from an RGB camera. The model can be used to detect faces from photos and videos using appropriate video or image decoding and pre-processing.

The datasheet for the model is captured in its model card hosted at NGC.