Offline Data Augmentation

Offline Data Augmentation is currently designed only for object-detection datasets in KITTI or COCO format.

Training a deep neural network can be a daunting task, and the most important component of training a model is the data. Acquiring curated and annotated datasets is often a manual process involving thousands of person-hours of painstaking labeling. Even if the data is carefully planned and collected, it is very difficult to anticipate every corner case a network may encounter, and repeatedly collecting and annotating the missing data is expensive and has long turnaround times.

Online augmentation in the training data loader is a good way to increase variation in the dataset. However, the augmented samples are generated randomly, according to the distribution the data loader follows when sampling the data, so the model may need to be trained for a long time to achieve a high level of accuracy.

To circumvent these limitations and give users control over the augmentations applied, TAO Data Services provides an Offline Data Augmentation service that generates a dataset with the required augmentations. Offline augmentation can dramatically increase the size of a dataset when collecting and labeling data is expensive or not possible. The service provides several custom GPU-accelerated augmentation routines:

Spatial augmentation

Color-space augmentation

Image blur

Spatial Augmentation

Spatial augmentation comprises routines where data is augmented in space. The following spatial augmentation operations are supported:

Rotate (optionally, with AI-assisted bounding-box refinement)

Resize

Translate

Shear

Flip

Color-Space Augmentation

Color-space augmentation comprises routines where the image data is augmented in the color space. The following operators are supported:

Hue rotation

Brightness offset

Contrast scaling

Saturation shift

Image Blur

In addition to the operations above, the augmentation service also supports image blurring or sharpening, which is described further in the KernelFilter Config section.

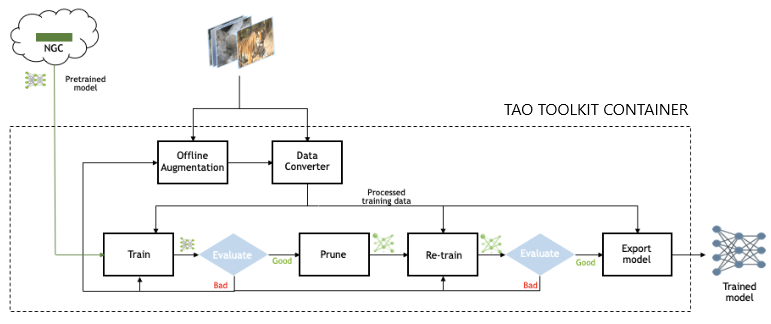

The spatial augmentation routines are applied to the images as well as the groundtruth labels, while the color augmentation routines and channel-wise blur operator are applied only to images. The sample workflow is as follows:

The configuration YAML file for augmentation experiements contains four major components, as well as a few global parameters for launching multi-GPU job and saving the job log.

Spatial-augmentation config

Color-augmentation config

Blur config

Data config (output image dimension, dataset type, etc.)

| Parameter | Datatype | Description |
|---|---|---|
| spatial_aug | Dict config | The configuration of the spatial-augmentation operators |
| color_aug | Dict config | The configuration of the color-augmentation operators |
| blur_aug | Dict config | The configuration of the Gaussian-blur operator to be applied to the image. The blur is computed channel-wise and then concatenated based on the number of image channels. |
| data | Dict config | The configuration of the input data type and the input/output dataset locations |
| random_seed | int32 | The seed for the randomized augmentation operations |

Spatial-Augmentation Config

The spatial-augmentation config contains parameters to configure the spatial augmentation routines.

| Parameter | Datatype | Description | Supported Values |
|---|---|---|---|
| rotation | Dict config | Configures the rotate-augmentation operator. Defining this value activates rotation. | |
| flip | Dict config | Configures the flip-augmentation operator. Defining this value activates flipping along the horizontal and/or vertical axes. | |
| translation | Dict config | Configures the translation-augmentation operator. Defining this value activates translation of the images along the x and/or y axes. | |
| shear | Dict config | Configures the shear-augmentation operator. Defining this value applies a shear to the images along the x and/or y axes. | |

Rotation Config

The rotation operation rotates the image by an angle θ. The transformation matrix for the rotation operation is computed as follows:

$$
[x_{new},\ y_{new},\ 1] = [x,\ y,\ 1]
\begin{bmatrix}
\cos\theta & \sin\theta & 0 \\
-\sin\theta & \cos\theta & 0 \\
x_t & y_t & 1
\end{bmatrix}
$$

where x_t and y_t are defined as

$$
x_t = \frac{h\sin\theta}{2} - \frac{w\cos\theta}{2} + \frac{w}{2},
\qquad
y_t = -\frac{h\cos\theta}{2} + \frac{h}{2} - \frac{w\sin\theta}{2}
$$

Here h is the height of the output image and w is the width of the output image.
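As a minimal sketch, the Python code below builds this row-vector rotation matrix with NumPy and applies it to the four corners of an (x1, y1, x2, y2) bounding box. The helpers `rotation_matrix` and `rotate_box` are hypothetical, not part of the TAO API, and taking the axis-aligned envelope of the rotated corners is an assumption about how a box could be updated when AI-assisted refinement is not used. With the x_t and y_t terms above, the point (w/2, h/2) maps to itself for any angle.

```python
import math

import numpy as np


def rotation_matrix(angle_deg, width, height):
    """Build the 3x3 row-vector rotation matrix from the formulas above."""
    a = math.radians(angle_deg)
    c, s = math.cos(a), math.sin(a)
    # Translation terms that keep the point (width/2, height/2) fixed.
    x_t = height * s / 2.0 - width * c / 2.0 + width / 2.0
    y_t = -height * c / 2.0 + height / 2.0 - width * s / 2.0
    return np.array([[c, s, 0.0],
                     [-s, c, 0.0],
                     [x_t, y_t, 1.0]])


def rotate_box(box, angle_deg, width, height):
    """Rotate the corners of an (x1, y1, x2, y2) box and return the
    axis-aligned box enclosing them (hypothetical helper)."""
    x1, y1, x2, y2 = box
    corners = np.array([[x1, y1, 1.0], [x2, y1, 1.0],
                        [x2, y2, 1.0], [x1, y2, 1.0]])
    # Row vectors [x, y, 1] multiply the matrix from the left, as in the formula.
    rotated = corners @ rotation_matrix(angle_deg, width, height)
    xs, ys = rotated[:, 0], rotated[:, 1]
    return xs.min(), ys.min(), xs.max(), ys.max()
```

For example, `rotate_box((100, 100, 200, 150), 5, 640, 480)` returns the box enclosing the corners of that box after a 5-degree rotation of a 640x480 image.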

| Parameter | Datatype | Description | Supported Values |
|---|---|---|---|
| angle | float | The angle of the rotation to be applied to the image and the coordinates | -360 to 360 (degrees); -2π to 2π (radians) |
| units | string | The units in which the angle parameter is measured | “degrees”, “radians” |
| refine_box | Dict config | Parameters for enabling AI-assisted bounding-box refinement | |

The refine_box config supports the following parameters:

| Parameter | Datatype | Description | Supported Values |
|---|---|---|---|
| gt_cache | string | The path to the ground-truth mask labels or the pseudo mask labels generated by the TAO Auto Labeling service | |
| enabled | boolean | A flag specifying whether to enable AI-assisted bounding-box refinement | True, False |

Shear Config

The shear operation introduces a slant to the object along the x and/or y dimension. With s_x = shear_ratio_x and s_y = shear_ratio_y, the transformation matrix for the shear operation is computed as follows:

$$
[x_{new},\ y_{new},\ 1] = [x,\ y,\ 1]
\begin{bmatrix}
1 & s_y & 0 \\
s_x & 1 & 0 \\
x_t & y_t & 1
\end{bmatrix},
\qquad
x_t = -\frac{h\,s_x}{2},
\qquad
y_t = -\frac{w\,s_y}{2}
$$

Here h is the height of the output image and w is the width of the output image.
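The shear matrix can be sketched the same way. The helper names below are hypothetical; the code only encodes the matrix and the x_t, y_t offsets above, which keep the point (w/2, h/2) fixed, and applies it to an array of (x, y) points such as box corners.

```python
import numpy as np


def shear_matrix(shear_ratio_x, shear_ratio_y, width, height):
    """Build the 3x3 row-vector shear matrix from the formula above."""
    x_t = -height * shear_ratio_x / 2.0
    y_t = -width * shear_ratio_y / 2.0
    return np.array([[1.0, shear_ratio_y, 0.0],
                     [shear_ratio_x, 1.0, 0.0],
                     [x_t, y_t, 1.0]])


def shear_points(points, shear_ratio_x, shear_ratio_y, width, height):
    """Apply the shear to an (N, 2) array of x, y coordinates (hypothetical helper)."""
    pts = np.asarray(points, dtype=float)
    # Append a column of ones to form homogeneous row vectors [x, y, 1].
    homogeneous = np.hstack([pts, np.ones((len(pts), 1))])
    sheared = homogeneous @ shear_matrix(shear_ratio_x, shear_ratio_y, width, height)
    return sheared[:, :2]
```

Each row moves horizontally by shear_ratio_x pixels per unit of y, which matches the description of shear_ratio_x in the table below.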

| Parameter | Datatype | Description | Supported Values |
|---|---|---|---|
| shear_ratio_x | float32 | The amount of horizontal shift per y row. | |
| shear_ratio_y | float32 | The amount of vertical shift per x column. | |

Flip Config

The operator flips an image and its bounding-box coordinates along the horizontal and/or vertical axis.

| Parameter | Datatype | Description | Supported Values |
|---|---|---|---|
| flip_horizontal | bool | The flag to enable flipping an image horizontally | true, false |
| flip_vertical | bool | The flag to enable flipping an image vertically | true, false |
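A minimal NumPy sketch of a flip, assuming an HxWxC image and (x1, y1, x2, y2) boxes in continuous pixel coordinates where the image spans [0, W] x [0, H]. The function `flip_sample` is hypothetical; the key detail is that mirroring swaps the two edges, so x1 stays the left edge and y1 stays the top edge.

```python
import numpy as np


def flip_sample(image, boxes, flip_horizontal=False, flip_vertical=False):
    """Flip an HxWxC image and its (x1, y1, x2, y2) boxes (hypothetical helper)."""
    height, width = image.shape[:2]
    boxes = np.asarray(boxes, dtype=float).copy()
    if flip_horizontal:
        image = image[:, ::-1]
        # Mirror x and swap x1/x2 so that x1 remains the left edge.
        boxes[:, [0, 2]] = width - boxes[:, [2, 0]]
    if flip_vertical:
        image = image[::-1, :]
        # Mirror y and swap y1/y2 so that y1 remains the top edge.
        boxes[:, [1, 3]] = height - boxes[:, [3, 1]]
    return image, boxes
```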

Translation Config

The operator translates the image and polygon coordinates along the x and/or y axis.

| Parameter | Datatype | Description | Supported Values |
|---|---|---|---|
| translate_x | int | The number of pixels to translate the image along the x axis | 0 - image_width |
| translate_y | int | The number of pixels to translate the image along the y axis | 0 - image_height |
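The sketch below shows what a whole-pixel translation does to an image and its boxes. It is an illustration under stated assumptions, not the service's implementation: the hypothetical `translate_sample` zero-fills pixels uncovered by the shift and clips shifted boxes to the image bounds, and neither behavior is specified in the table above.

```python
import numpy as np


def translate_sample(image, boxes, translate_x=0, translate_y=0):
    """Shift an HxW(xC) image by whole pixels and move its boxes to match.

    Zero-filling uncovered pixels and clipping boxes to the image are
    assumptions made for this sketch.
    """
    height, width = image.shape[:2]
    out = np.zeros_like(image)
    # Source and destination corners of the region still visible after the shift.
    src_x0, src_y0 = max(0, -translate_x), max(0, -translate_y)
    dst_x0, dst_y0 = max(0, translate_x), max(0, translate_y)
    w = width - abs(translate_x)
    h = height - abs(translate_y)
    if w > 0 and h > 0:
        out[dst_y0:dst_y0 + h, dst_x0:dst_x0 + w] = \
            image[src_y0:src_y0 + h, src_x0:src_x0 + w]
    boxes = np.asarray(boxes, dtype=float) + [translate_x, translate_y,
                                              translate_x, translate_y]
    boxes[:, [0, 2]] = boxes[:, [0, 2]].clip(0, width)
    boxes[:, [1, 3]] = boxes[:, [1, 3]].clip(0, height)
    return out, boxes
```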

Color Augmentation Config

The color augmentation config contains parameters to configure the color space augmentation routines, including the following:

| Parameter | Datatype | Description |
|---|---|---|
| hue | Dict config | This augmentation operator applies hue rotation augmentation. |
| contrast | Dict config | This augmentation operator applies contrast scaling. |
| brightness | Dict config | This configures the brightness shift augmentation operator. |
| saturation | Dict config | This augmentation operator applies color saturation augmentation. |

Hue Config

This augmentation operator applies color-space manipulation by converting the RGB image to HSV, rotating the hue, and then converting the result back to RGB.

| Parameter | Datatype | Description | Supported Values |
|---|---|---|---|
| hue_rotation_angle | float32 | Hue rotation in degrees (scalar or vector). A value of 0.0 (modulo 360) leaves the hue unchanged. | 0 - 360 (the angles are computed as angle % 360) |
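The RGB to HSV to RGB round trip can be illustrated on a single 8-bit pixel with Python's standard `colorsys` module. This per-pixel function (`rotate_hue`, hypothetical) only shows the arithmetic; the service itself uses GPU-accelerated routines. Note that `colorsys` stores hue as a fraction of a full turn in [0, 1), so degrees are divided by 360.

```python
import colorsys


def rotate_hue(rgb, hue_rotation_angle):
    """Rotate the hue of one 8-bit RGB pixel by hue_rotation_angle degrees."""
    r, g, b = (c / 255.0 for c in rgb)
    h, s, v = colorsys.rgb_to_hsv(r, g, b)
    # Angles are taken modulo 360, then converted to a fraction of a turn.
    h = (h + (hue_rotation_angle % 360) / 360.0) % 1.0
    return tuple(round(c * 255) for c in colorsys.hsv_to_rgb(h, s, v))
```

For example, rotating pure red by 120 degrees gives approximately pure green.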

Saturation Config

This augmentation operator applies color-space manipulation by converting the RGB image to HSV, shifting the saturation, and then converting the result back to RGB.

| Parameter | Datatype | Description | Supported Values |
|---|---|---|---|
| saturation_shift | float32 | Saturation shift multiplier. A value of 1.0 leaves the saturation unchanged. A value of 0 removes all saturation from the image and makes all channels equal in value. | 0.0 - 1.0 |
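Because saturation_shift is described as a multiplier, a per-pixel sketch scales the HSV saturation channel and converts back. The function name `shift_saturation` is hypothetical; it reproduces the two documented cases: 1.0 leaves the pixel unchanged and 0.0 makes all channels equal.

```python
import colorsys


def shift_saturation(rgb, saturation_shift):
    """Scale the saturation of one 8-bit RGB pixel by saturation_shift."""
    r, g, b = (c / 255.0 for c in rgb)
    h, s, v = colorsys.rgb_to_hsv(r, g, b)
    # Multiply and keep the result inside the valid [0, 1] saturation range.
    s = min(max(s * saturation_shift, 0.0), 1.0)
    return tuple(round(c * 255) for c in colorsys.hsv_to_rgb(h, s, v))
```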

Brightness Config

This augmentation operator applies a channel-wise brightness shift.

| Parameter | Datatype | Description | Supported Values |
|---|---|---|---|
| offset | float32 | Offset value per color channel | 0 - 255 |
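A channel-wise brightness offset amounts to adding one value per channel, broadcast over the image. In this NumPy sketch the function name is hypothetical, and clipping to 0-255 for 8-bit images is an assumption about how overflow is handled.

```python
import numpy as np


def shift_brightness(image, offset):
    """Add a per-channel offset to an 8-bit HxWxC image.

    offset may be a scalar or one value per channel; clipping the result to
    0-255 is an assumption of this sketch.
    """
    shifted = image.astype(np.float32) + np.asarray(offset, dtype=np.float32)
    return np.clip(shifted, 0, 255).astype(np.uint8)
```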

Contrast Config

This augmentation operator applies contrast scaling around a center point to an image.

| Parameter | Datatype | Description | Supported Values |
|---|---|---|---|
| contrast | float32 | The contrast scale value. A value of 0 leaves the contrast unchanged. | 0 - 1.0 |
| center | float32 | The center value for the image. In this case, the images are scaled between 0 and 255 (8-bit images), so setting a value of 127.5 is common. | 0.0 - 1.0 |
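The exact contrast formula is not given above, so the following is only one plausible reading, labeled as an assumption: pixels are stretched away from (or toward) the center by a factor of 1 + contrast, which makes a contrast value of 0 the identity, as the table states. The function `scale_contrast` is hypothetical, and center must be expressed in the same units as the pixel values.

```python
import numpy as np


def scale_contrast(image, contrast, center):
    """Scale pixel values around center.

    Hypothetical formula: out = center + (1 + contrast) * (image - center),
    chosen so that contrast = 0 leaves the image unchanged.
    """
    image = np.asarray(image, dtype=np.float32)
    return center + (1.0 + contrast) * (image - center)
```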

KernelFilter Config

The KernelFilter config applies the Gaussian blur operator to an image. A Gaussian kernel is built from the following parameters, and a per-channel 2D convolution is then performed between the image and the kernel.

| Parameter | Datatype | Description | Supported Values |
|---|---|---|---|
| size | int | The size of the kernel for convolution | >0 |
| std | float | The standard deviation of the Gaussian filter for blurring | >0.0 |
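The kernel construction and per-channel convolution can be sketched in NumPy as follows. The function names are hypothetical, and edge padding (so the output keeps the input size) is an assumption; the service's border handling is not specified. Because a Gaussian kernel is symmetric, the sliding-window sum below is equivalent to a true convolution.

```python
import numpy as np


def gaussian_kernel(size, std):
    """Build a normalized size x size Gaussian kernel."""
    ax = np.arange(size, dtype=np.float64) - (size - 1) / 2.0
    g = np.exp(-(ax ** 2) / (2.0 * std ** 2))
    kernel = np.outer(g, g)
    return kernel / kernel.sum()


def blur(image, size, std):
    """Blur each channel of an HxWxC image with the same Gaussian kernel."""
    kernel = gaussian_kernel(size, std)
    pad = size // 2
    height, width = image.shape[:2]
    # Pad only the spatial axes; channels are filtered independently.
    padded = np.pad(image.astype(np.float64),
                    ((pad, pad), (pad, pad), (0, 0)), mode="edge")
    out = np.zeros(image.shape, dtype=np.float64)
    for dy in range(size):
        for dx in range(size):
            out += kernel[dy, dx] * padded[dy:dy + height, dx:dx + width]
    return out
```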

Data Config

The data config parameters configure the input and output data dimension, type, and location.

| Parameter | Datatype | Description |
|---|---|---|
| dataset_type | string | The dataset type. Only “coco” and “kitti” values are supported |
| output_image_width | Optional[int] | The output image width. If this value is not specified, the input image width is preserved. |
| output_image_height | Optional[int] | The output image height. If this value is not specified, the input image height is preserved. |
| image_dir | string | The input image directory |
| ann_path | string | The annotation path (i.e. the label directory for the KITTI dataset and annotation JSON for COCO) |
| output_dataset | string | The directory to save the augmented images and labels |
| batch_size | int | The batch size of DALI dataloader |
| include_masks | boolean | A flag specifying whether to load segmentation annotation when reading a COCO JSON file |
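The spec itself is a YAML file. As a sketch, the Python dict below mirrors the nested structure described by the tables in this section (it could be written out with a YAML library such as PyYAML, not shown). All paths and values are illustrative placeholders, chosen to match the sample images at the end of this page, and `resolve_output_size` is a hypothetical helper that applies two rules from the data config table.

```python
# Illustrative spec mirroring the tables above; paths and values are placeholders.
SPEC = {
    "spatial_aug": {
        "rotation": {"angle": 5.0, "units": "degrees"},
    },
    "color_aug": {
        "hue": {"hue_rotation_angle": 25.0},
        "saturation": {"saturation_shift": 0.0},
    },
    "blur_aug": {"size": 3, "std": 1.0},
    "data": {
        "dataset_type": "kitti",
        "image_dir": "/path/to/images",
        "ann_path": "/path/to/labels",  # KITTI label directory
        "output_dataset": "/path/to/augmented",
        "batch_size": 8,
        "include_masks": False,
    },
    "random_seed": 42,
}


def resolve_output_size(data, input_width, input_height):
    """Hypothetical helper: apply the data-config rules from the table above."""
    if data["dataset_type"] not in ("coco", "kitti"):
        raise ValueError("dataset_type must be 'coco' or 'kitti'")
    width = data.get("output_image_width")
    height = data.get("output_image_height")
    # Unspecified output dimensions preserve the input image size.
    return (input_width if width is None else width,
            input_height if height is None else height)
```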

The offline augmentation service has a simple command-line interface, which is defined as follows:

tao dataset augmentation generate [-h] -e <experiment spec file> [--gpus <num_gpus>]

Required Arguments

-e, --experiment_spec_file: The path to the YAML spec file

Optional Arguments

--gpus: The number of GPUs to use. The default value is 1.

-h, --help: Show this help message and exit.
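When the job is launched from a Python script, the documented command can be assembled as an argument list and passed to the standard `subprocess` module. Only the `-e` and `--gpus` arguments listed above are used; the function names are hypothetical, and the sketch assumes the `tao` launcher is on the `PATH`.

```python
import subprocess


def build_command(spec_path, gpus=1):
    """Assemble the documented CLI call as an argument list (hypothetical helper)."""
    return ["tao", "dataset", "augmentation", "generate",
            "-e", spec_path, "--gpus", str(gpus)]


def run_augmentation(spec_path, gpus=1):
    """Run the job; raises subprocess.CalledProcessError on a non-zero exit."""
    subprocess.run(build_command(spec_path, gpus), check=True)
```

Passing a list rather than a single shell string avoids quoting problems when the spec path contains spaces.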

Once the dataset is generated, you can use the dataset-convert tool to convert it to TFRecords so that it can be ingested by the train sub-task. Details about converting the data to TFRecords are described in the Data Input for Object Detection section, and training a model with this dataset is described in the Data Annotation Format section.

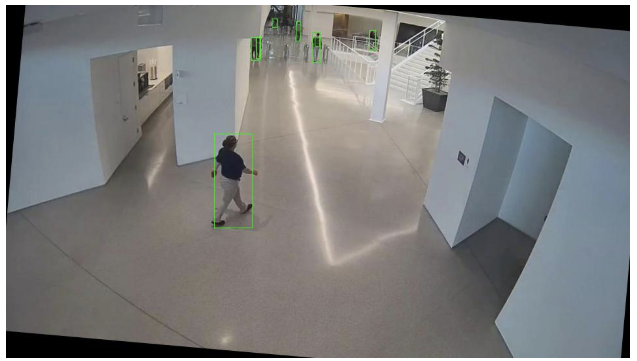

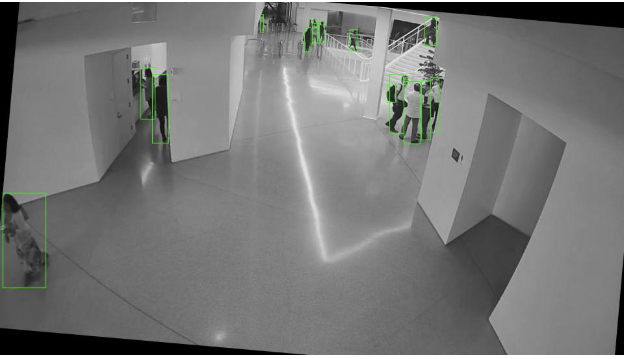

Below are some sample rendered augmented images:

Input image rotated by 5 degrees

Image rotated by 5 degrees, with hue rotation by 25 degrees and a saturation shift of 0.0