Overview

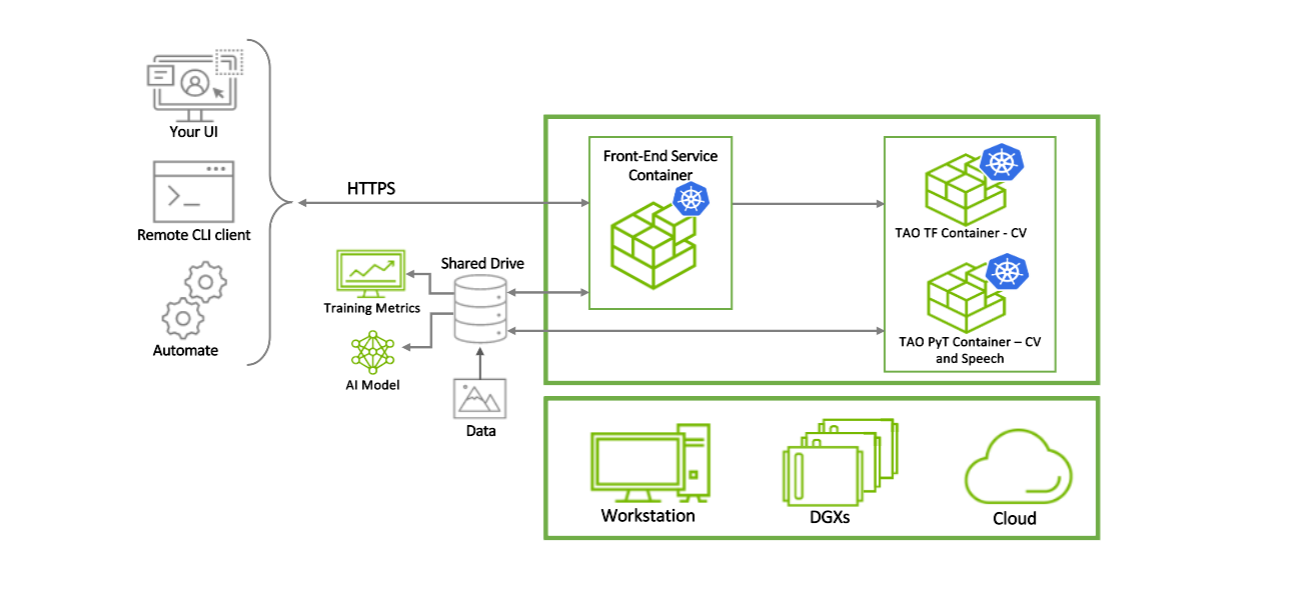

NVIDIA Transfer Learning (NVTL) API is a cloud service for building end-to-end AI models from custom datasets. In addition to exposing NVTL Toolkit functionality through APIs, the service lets a client build end-to-end workflows: creating datasets and models, obtaining pretrained models from NGC, obtaining default specs, and training, evaluating, optimizing, and exporting models for deployment at the edge. NVTL jobs run on GPUs within a multi-node cloud cluster.

You can develop client applications on top of the provided API, or use the provided NVTL remote client CLI.

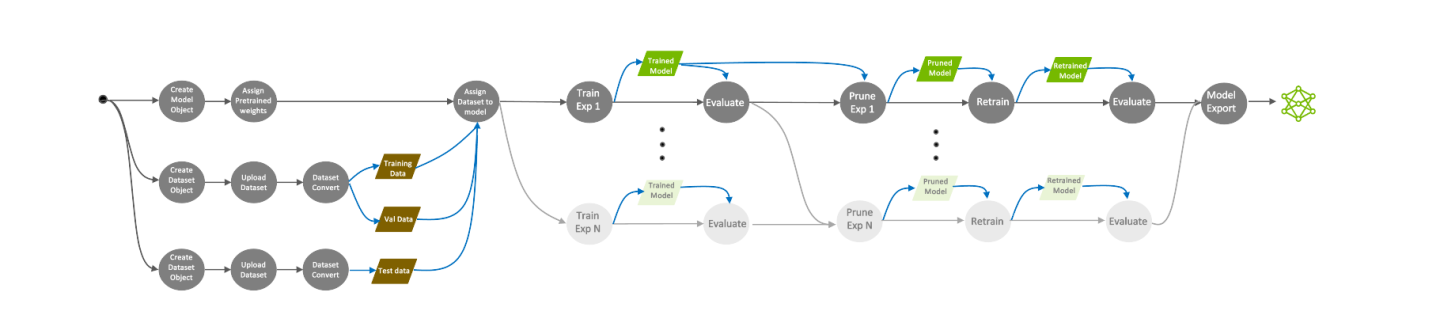

The API lets you create datasets and either upload their data to the service or have the service pull the data directly from a public cloud link, with no upload step. You then create models, and create experiments by linking models to training, evaluation, and inference datasets.
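
As a rough illustration of the linking step, the sketch below models an experiment as a model ID tied to dataset IDs. It runs entirely in memory and makes no API calls. Every name in it (`DatasetRef`, `ExperimentLinks`, `link_experiment`, the field names, the IDs, and the example URL) is hypothetical and is not taken from the NVTL API schema.

```python
from dataclasses import asdict, dataclass, field
from typing import List, Optional


@dataclass
class DatasetRef:
    """A dataset known to the service (hypothetical field names)."""
    dataset_id: str
    # Set when the service pulls data from a public cloud link;
    # None when the data was uploaded instead.
    pull_url: Optional[str] = None


@dataclass
class ExperimentLinks:
    """Ties one model to the datasets used for each purpose."""
    model_id: str
    train_datasets: List[str] = field(default_factory=list)
    eval_dataset: Optional[str] = None
    inference_dataset: Optional[str] = None


def link_experiment(
    model_id: str,
    train: List[DatasetRef],
    eval_ds: Optional[DatasetRef] = None,
    inference_ds: Optional[DatasetRef] = None,
) -> dict:
    """Build a plain dict describing the model-to-dataset links."""
    links = ExperimentLinks(
        model_id=model_id,
        train_datasets=[d.dataset_id for d in train],
        eval_dataset=eval_ds.dataset_id if eval_ds else None,
        inference_dataset=inference_ds.dataset_id if inference_ds else None,
    )
    return asdict(links)


# One uploaded dataset and one pulled from a (made-up) public link.
uploaded = DatasetRef("ds-train-001")
pulled = DatasetRef("ds-eval-001", pull_url="https://example.com/eval.tar.gz")
payload = link_experiment("model-001", [uploaded], eval_ds=pulled)
```

A real client would send something equivalent in a request body. The actual field names and endpoints come from the API reference, not from this sketch.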

Actions such as train, evaluate, prune, retrain, export, and inference are started through API calls. For each action, you can request the default parameters, edit them as needed, and pass the result when you run the action. These parameters, called specs, are in JSON format.
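
The request, edit, and pass cycle for specs can be sketched as a recursive merge of your overrides into the default JSON. The example uses no network calls. The spec contents (`train`, `num_epochs`, `optimizer`, `lr`, `seed`) and the helper `apply_overrides` are assumptions made for illustration. Real default specs come from the service and differ per model and action.

```python
import copy
import json


def apply_overrides(default_spec: dict, overrides: dict) -> dict:
    """Return a copy of default_spec with overrides merged in recursively.

    Nested dicts are merged key by key; any other value is replaced.
    The input default_spec is left unmodified.
    """
    merged = copy.deepcopy(default_spec)
    for key, value in overrides.items():
        if isinstance(value, dict) and isinstance(merged.get(key), dict):
            merged[key] = apply_overrides(merged[key], value)
        else:
            merged[key] = copy.deepcopy(value)
    return merged


# Hypothetical default spec, standing in for what the service would return.
default_train_spec = json.loads(
    '{"train": {"num_epochs": 80, "optimizer": {"lr": 0.001}}, "seed": 42}'
)

# Change only the epoch count; every other default is kept.
train_spec = apply_overrides(default_train_spec, {"train": {"num_epochs": 10}})

# JSON text that a client could pass along when running the action.
request_body = json.dumps(train_spec, sort_keys=True)
```

Merging into a copy keeps the original defaults available, so several runs with different overrides can start from the same baseline.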

The service exposes Job API endpoints that let you cancel, download, and monitor jobs. These endpoints also report job progress, such as epoch number, accuracy, loss values, and ETA.
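
Job monitoring usually comes down to polling until the job reaches a final state. The sketch below shows that loop under stated assumptions: `fetch_status` is a hypothetical callable that would wrap the real Job API request, and the status strings and field names (`status`, `epoch`, `loss`) are placeholders, not the service's actual response schema. A canned sequence of responses stands in for the service so the example runs offline.

```python
import time
from typing import Callable

# Assumed names for final job states; check the API reference for real values.
TERMINAL_STATES = {"Done", "Error", "Canceled"}


def wait_for_job(
    fetch_status: Callable[[], dict],
    poll_seconds: float = 5.0,
    max_polls: int = 1000,
) -> dict:
    """Poll fetch_status until the job reports a terminal state.

    Returns the last status dict, or raises TimeoutError after max_polls.
    """
    for _ in range(max_polls):
        info = fetch_status()
        if info.get("status") in TERMINAL_STATES:
            return info
        time.sleep(poll_seconds)
    raise TimeoutError(f"job still running after {max_polls} polls")


# Canned responses standing in for successive Job API replies.
_fake_replies = iter([
    {"status": "Running", "epoch": 1, "loss": 0.9},
    {"status": "Running", "epoch": 2, "loss": 0.6},
    {"status": "Done", "epoch": 3, "loss": 0.4},
])

final = wait_for_job(lambda: next(_fake_replies), poll_seconds=0.0)
```

Passing the fetch function in as a parameter keeps the loop independent of any HTTP library, and the `max_polls` cap prevents a stalled job from blocking the client indefinitely.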

The service also separates the users within a cluster from one another and can protect read-write access.

The NVTL remote client is a Command Line Interface (CLI) that uses API calls to expose an interface similar to the TAO Launcher CLI.

Use cases for the REST API include third-party web-UI cloud services. Use cases for the remote client include training farms, internal model production systems, and research projects.