TAO Toolkit ClearML Integration

The following networks in TAO Toolkit interface with ClearML, allowing you to continuously iterate on, visualize, and track multiple training experiments, and to derive meaningful insights for a training use case:

DetectNet-v2

FasterRCNN

Image Classification - TF2

RetinaNet

YOLOv4/YOLOv4-Tiny

YOLOv3

SSD

DSSD

EfficientDet - TF2

MaskRCNN

UNet

In TAO Toolkit 4.0.1, the ClearML visualization suite synchronizes with the data rendered in TensorBoard. Therefore, to see rendered data on the ClearML server, you need to enable TensorBoard visualization. The integration can also send you alerts via Slack or email when training runs fail.

Enabling the MLOps integration does not require you to install TensorBoard.

These are the broad steps involved in setting up ClearML for TAO Toolkit:

Setting up a ClearML account

Acquiring ClearML credentials

Logging into the ClearML client

Setting the configurable data for the ClearML experiment

Setting up a ClearML Account

Sign up for a free account at the ClearML website and then log in to your ClearML account.

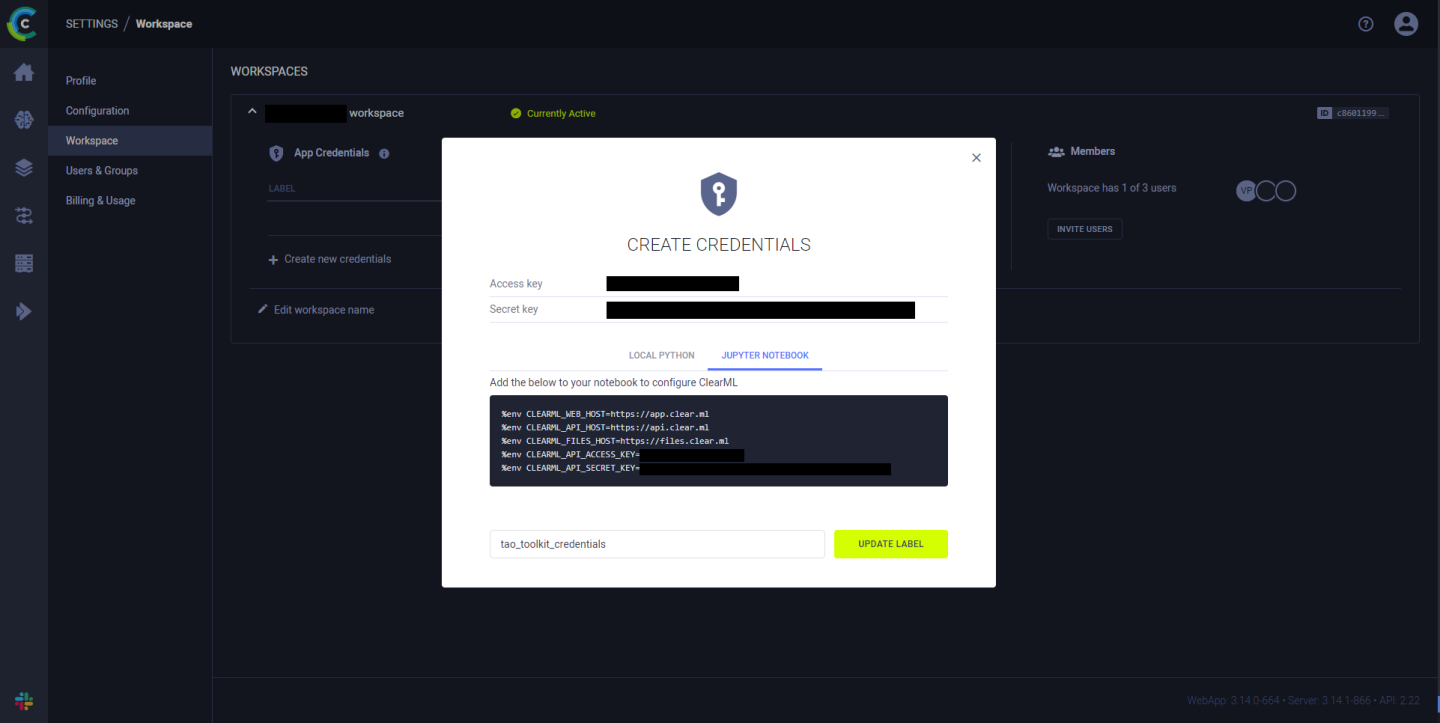

Acquiring ClearML API Credentials

Once you have logged in to your ClearML account, generate new credentials: open the settings pane from the top-right corner of the ClearML web app and click Generate New Credentials.

NVIDIA recommends getting the credentials in the form of environment variables for maximum ease of use. You can get these variables by clicking the Jupyter Notebook tab and copying the environment variables.

Jupyter Notebook tab of the new credentials under Settings/Workspace
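If you copy the variables from the Jupyter Notebook tab, you get a block of `%env NAME=value` lines like the ones used later in this section. The following Python sketch turns such a block into a dictionary that you can reuse in the launcher's mounts file or in a `docker run` command. It assumes one `%env` assignment per line; the helper name `parse_env_block` is hypothetical and not part of ClearML or TAO Toolkit.

```python
from __future__ import annotations


def parse_env_block(text: str) -> dict[str, str]:
    """Parse lines of the form '%env NAME=value' into a {NAME: value} dict.

    Hypothetical helper. Blank lines and lines that do not start with
    '%env' are ignored.
    """
    env: dict[str, str] = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line.startswith("%env"):
            continue
        # Drop the '%env' magic, then split on the first '=' only,
        # because secret values may themselves contain '='.
        assignment = line[len("%env"):].strip()
        name, sep, value = assignment.partition("=")
        if sep and name:
            env[name.strip()] = value.strip()
    return env


copied = """
%env CLEARML_WEB_HOST=https://app.clear.ml
%env CLEARML_API_HOST=https://api.clear.ml
%env CLEARML_API_ACCESS_KEY=<API_ACCESS_KEY>
"""
print(parse_env_block(copied)["CLEARML_API_HOST"])  # https://api.clear.ml
```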

Install the clearml Library

Install the clearml library on your local machine in a Python 3 environment.

python3 -m pip install clearml
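To confirm that the install landed in the interpreter you will actually use, a quick check such as the following Python sketch can help. It relies only on the standard library (`importlib.util.find_spec` and `importlib.metadata.version`); the helper name `clearml_version` is illustrative.

```python
from importlib import metadata
import importlib.util
from typing import Optional


def clearml_version() -> Optional[str]:
    """Return the installed clearml version, or None if it is not importable."""
    if importlib.util.find_spec("clearml") is None:
        return None
    try:
        return metadata.version("clearml")
    except metadata.PackageNotFoundError:
        # Importable (for example from a source checkout) but without package metadata.
        return "unknown"


version = clearml_version()
if version is None:
    print("clearml is not installed; run: python3 -m pip install clearml")
else:
    print(f"clearml {version} is installed")
```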

Log in to the ClearML Client in the TAO Toolkit Container

To send data from your local compute unit to the ClearML server and render it on the dashboard, the ClearML client in the TAO Toolkit container must be logged in and synchronized with your profile. To log in the ClearML client in the container, set the following environment variables using the credentials you generated in your ClearML account.

%env CLEARML_WEB_HOST=https://app.clear.ml
%env CLEARML_API_HOST=https://api.clear.ml
%env CLEARML_FILES_HOST=https://files.clear.ml
%env CLEARML_API_ACCESS_KEY=<API_ACCESS_KEY>
%env CLEARML_API_SECRET_KEY=<API_SECRET_KEY>
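`%env` is an IPython/Jupyter magic, so the lines above apply when you drive TAO Toolkit from a notebook; in a plain shell you would use `export` instead. Before launching a run, you may want to confirm that all five variables are visible to the process. The Python sketch below is a hypothetical helper, not part of ClearML; it only inspects the environment, treats the literal `<...>` placeholders as unset, and never prints secret values.

```python
import os
from typing import List, Mapping, Optional

# The five variables this section asks you to set.
REQUIRED_CLEARML_VARS = (
    "CLEARML_WEB_HOST",
    "CLEARML_API_HOST",
    "CLEARML_FILES_HOST",
    "CLEARML_API_ACCESS_KEY",
    "CLEARML_API_SECRET_KEY",
)


def missing_clearml_vars(env: Optional[Mapping[str, str]] = None) -> List[str]:
    """Return required variable names that are unset, empty, or still placeholders."""
    env = os.environ if env is None else env
    missing = []
    for name in REQUIRED_CLEARML_VARS:
        value = env.get(name, "").strip()
        # Treat the '<API_ACCESS_KEY>'-style placeholders as not yet filled in.
        if not value or (value.startswith("<") and value.endswith(">")):
            missing.append(name)
    return missing


missing = missing_clearml_vars()
if missing:
    print("Missing ClearML settings:", ", ".join(missing))
else:
    print("All ClearML variables are set.")
```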

To set the environment variables via the TAO Toolkit launcher, use the sample JSON file below as a reference and replace the value fields under the Envs element of the ~/.tao_mounts.json file.

{
    "Mounts": [
        {
            "source": "/path/to/your/data",
            "destination": "/workspace/tao-experiments/data"
        },
        {
            "source": "/path/to/your/local/results",
            "destination": "/workspace/tao-experiments/results"
        },
        {
            "source": "/path/to/config/files",
            "destination": "/workspace/tao-experiments/specs"
        }
    ],
    "Envs": [
        {
            "variable": "CLEARML_WEB_HOST",
            "value": "https://app.clear.ml"
        },
        {
            "variable": "CLEARML_API_HOST",
            "value": "https://api.clear.ml"
        },
        {
            "variable": "CLEARML_FILES_HOST",
            "value": "https://files.clear.ml"
        },
        {
            "variable": "CLEARML_API_ACCESS_KEY",
            "value": "<API_ACCESS_KEY>"
        },
        {
            "variable": "CLEARML_API_SECRET_KEY",
            "value": "<API_SECRET_KEY>"
        }
    ],
    "DockerOptions": {
        "shm_size": "16G",
        "ulimits": {
            "memlock": -1,
            "stack": 67108864
        },
        "user": "1000:1000",
        "ports": {
            "8888": 8888
        }
    }
}
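Rather than editing the Envs array by hand, you could script the change. The following is a minimal Python sketch, assuming the file layout shown above (a top-level Envs list of objects with variable and value keys); the function name `set_tao_envs` is hypothetical. It updates existing entries in place, appends missing ones, and leaves Mounts and DockerOptions untouched. Because the file then contains your secret key, consider restricting its permissions.

```python
import json
from pathlib import Path
from typing import Mapping


def set_tao_envs(mounts_file: Path, values: Mapping[str, str]) -> dict:
    """Insert or update entries in the "Envs" list of a TAO launcher mounts file.

    Hypothetical helper; assumes the {"variable": ..., "value": ...} entry layout.
    """
    config = json.loads(mounts_file.read_text()) if mounts_file.exists() else {}
    envs = config.setdefault("Envs", [])
    # Index existing entries by variable name so they are updated, not duplicated.
    by_name = {entry.get("variable"): entry for entry in envs}
    for name, value in values.items():
        if name in by_name:
            by_name[name]["value"] = value
        else:
            entry = {"variable": name, "value": value}
            envs.append(entry)
            by_name[name] = entry
    mounts_file.write_text(json.dumps(config, indent=4) + "\n")
    return config
```

For example, `set_tao_envs(Path.home() / ".tao_mounts.json", {"CLEARML_API_HOST": "https://api.clear.ml"})` would set or add that single entry.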

When running the networks directly from TAO Toolkit containers, pass the variables with the -e flag of the docker command. For example, to run detectnet_v2 with ClearML directly in the container, use the following command:

docker run -it --rm --gpus all \
    -v /path/in/host:/path/in/docker \
    -e CLEARML_WEB_HOST="https://app.clear.ml" \
    -e CLEARML_API_HOST="https://api.clear.ml" \
    -e CLEARML_FILES_HOST="https://files.clear.ml" \
    -e CLEARML_API_ACCESS_KEY="<API_ACCESS_KEY>" \
    -e CLEARML_API_SECRET_KEY="<API_SECRET_KEY>" \
    nvcr.io/nvidia/tao/tao-toolkit:5.0.0-tf1.15.5 \
    detectnet_v2 train -e /path/to/experiment/spec.txt \
    -r /path/to/results/dir \
    -k $KEY --gpus 4
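The following Python sketch builds the same docker run invocation as an argument list suitable for `subprocess.run`. The helper name `tao_docker_command` is hypothetical. One deliberate difference from the command above: it passes `-e NAME` without a value, which tells Docker to copy `NAME` from the host environment, so the secret key is not written into the command line or your shell history. Variables that are not set on the host are simply not set in the container.

```python
import shlex
from typing import List, Sequence

CLEARML_VARS = (
    "CLEARML_WEB_HOST",
    "CLEARML_API_HOST",
    "CLEARML_FILES_HOST",
    "CLEARML_API_ACCESS_KEY",
    "CLEARML_API_SECRET_KEY",
)


def tao_docker_command(image: str, mounts: Sequence[str], network_args: Sequence[str]) -> List[str]:
    """Build a 'docker run' argument list that forwards the ClearML variables."""
    cmd = ["docker", "run", "-it", "--rm", "--gpus", "all"]
    for mount in mounts:
        cmd += ["-v", mount]
    # '-e NAME' with no '=value' makes docker copy NAME from the host environment.
    for name in CLEARML_VARS:
        cmd += ["-e", name]
    cmd.append(image)
    cmd.extend(network_args)
    return cmd


cmd = tao_docker_command(
    "nvcr.io/nvidia/tao/tao-toolkit:5.0.0-tf1.15.5",
    ["/path/in/host:/path/in/docker"],
    ["detectnet_v2", "train", "-e", "/path/to/experiment/spec.txt",
     "-r", "/path/to/results/dir", "-k", "<KEY>", "--gpus", "4"],
)
# Print a shell-quoted preview; pass `cmd` to subprocess.run(cmd, check=True) to execute it.
print(shlex.join(cmd))
```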

TAO Toolkit provides the following options to configure the ClearML client:

project: A string containing the name of the project to which the experiment data is uploaded.

tags: A list of strings that can be used to tag the experiment.

task: The name of the experiment. To keep the name unique per run, TAO Toolkit appends to it a timestamp indicating when the experiment run was created.
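TAO Toolkit appends the timestamp to the task name itself, so you do not need to do this. The Python sketch below only illustrates why a fixed task value still yields a distinct ClearML task name per run; the underscore separator and the timestamp format are assumptions and may not match what TAO Toolkit actually produces.

```python
from datetime import datetime
from typing import Optional


def unique_task_name(task: str, created: Optional[datetime] = None) -> str:
    """Append a run-creation timestamp to a base task name (format is illustrative)."""
    created = created or datetime.now()
    return f"{task}_{created:%Y%m%d_%H%M%S}"


print(unique_task_name("training_experiment_name", datetime(2023, 5, 1, 14, 30, 0)))
# training_experiment_name_20230501_143000
```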

Depending on the schema the network follows, the spec file snippet to be added to the network may vary slightly.

For DetectNet_v2, UNet, FasterRCNN, YOLOv3/YOLOv4/YOLOv4-Tiny, RetinaNet, and SSD/DSSD, add the following snippet under the training_config element of the network's spec file.

visualizer {
  enabled: true
  clearml_config {
    project: "name_of_project"
    tags: "training"
    tags: "tao_toolkit"
    task: "training_experiment_name"
  }
}

For MaskRCNN, add the following snippet to the network's training configuration:

clearml_config {
  project: "name_of_project"
  tags: "training"
  tags: "tao_toolkit"
  task: "training_experiment_name"
}

For EfficientDet - TF2 and Image Classification - TF2, add the following snippet under the train config element in the train.yaml file:

clearml:
  task: "name_of_the_experiment"
  project: "name_of_the_project"

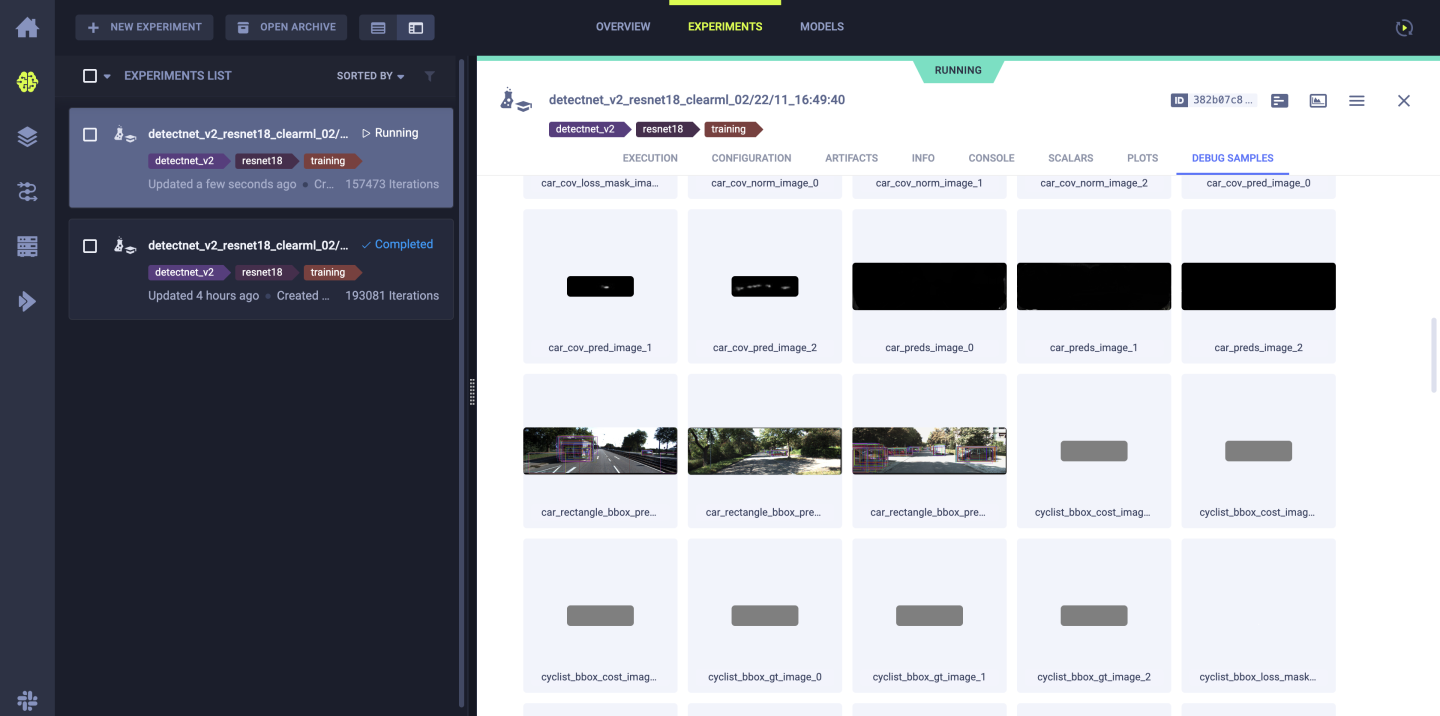

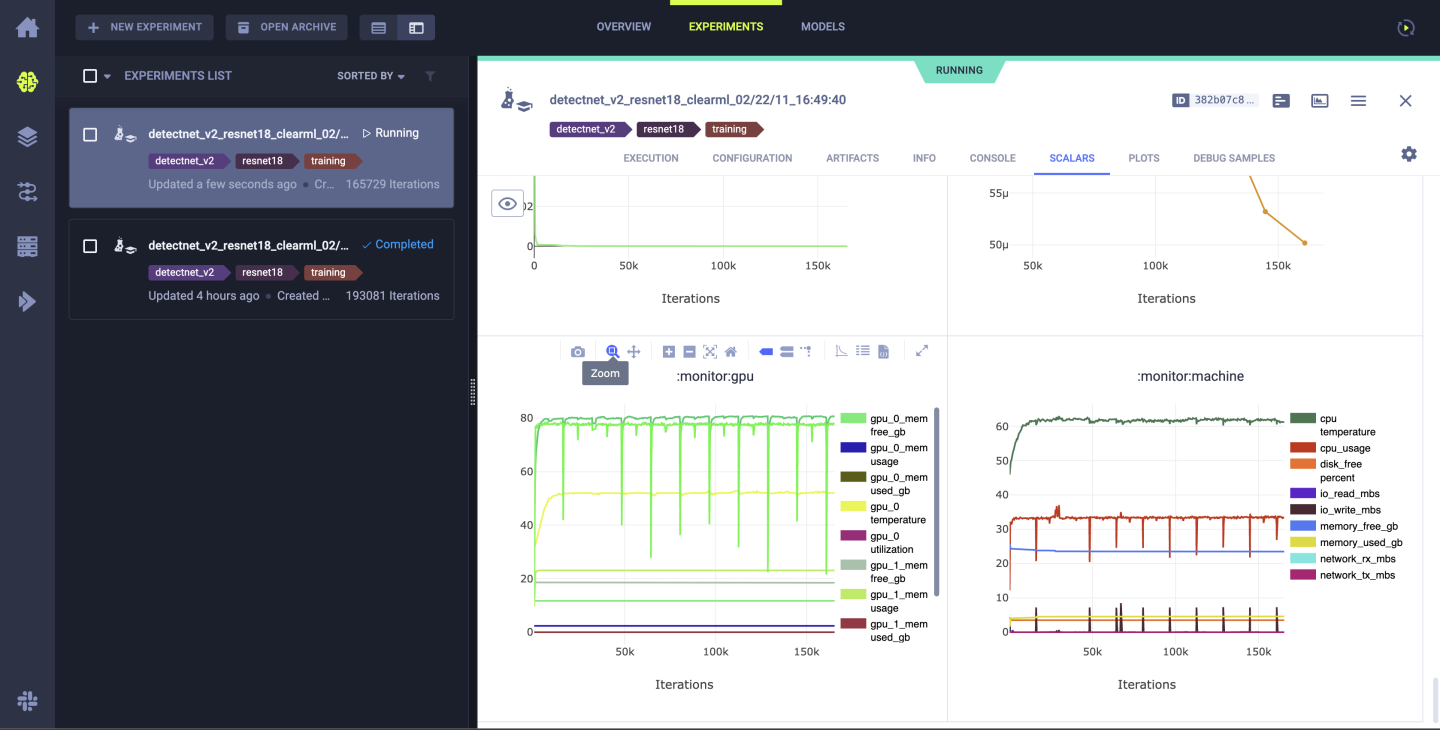

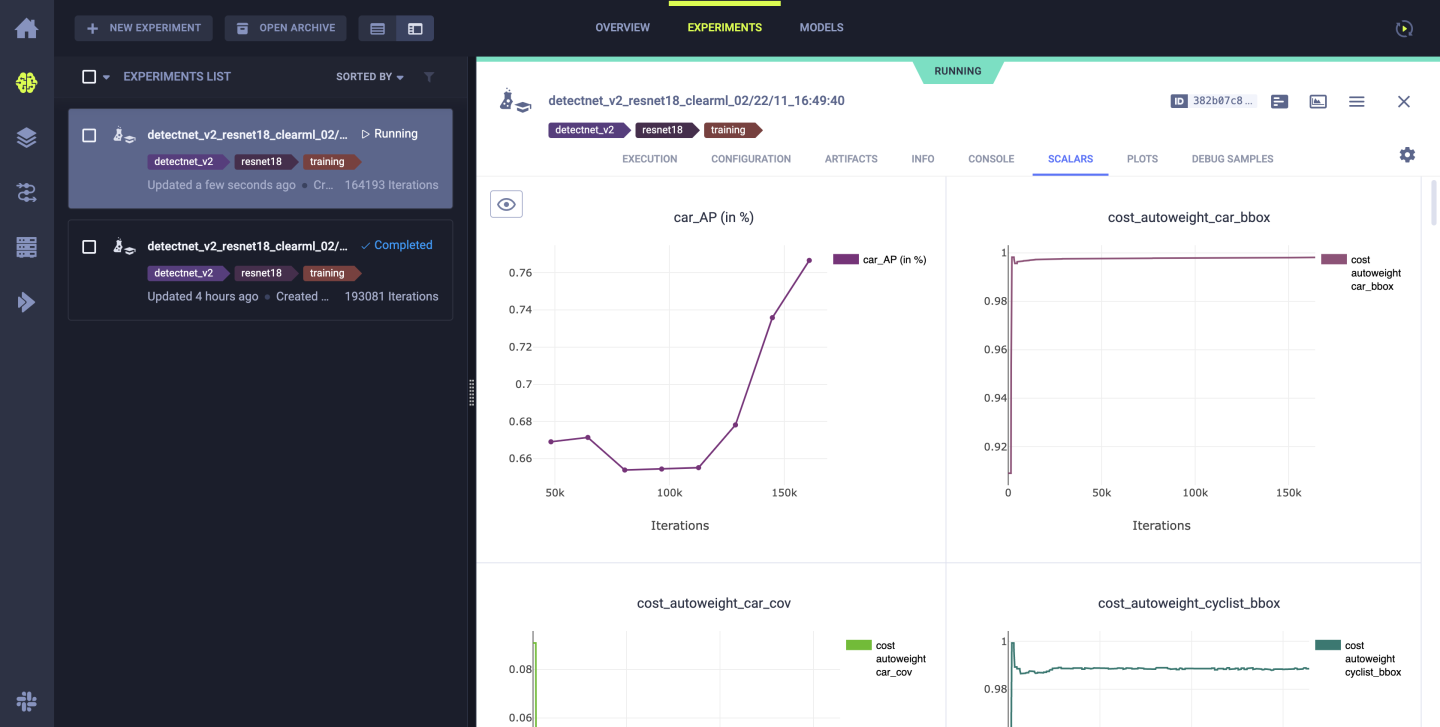

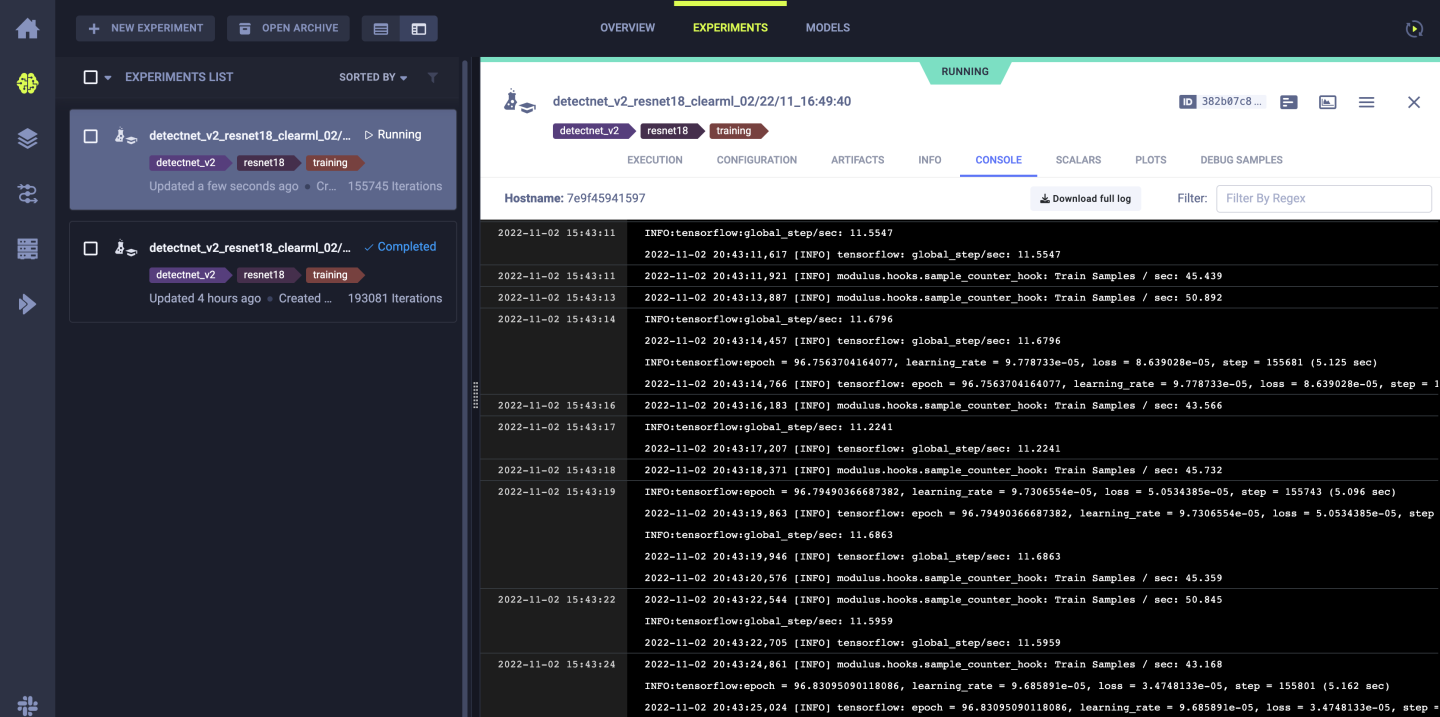

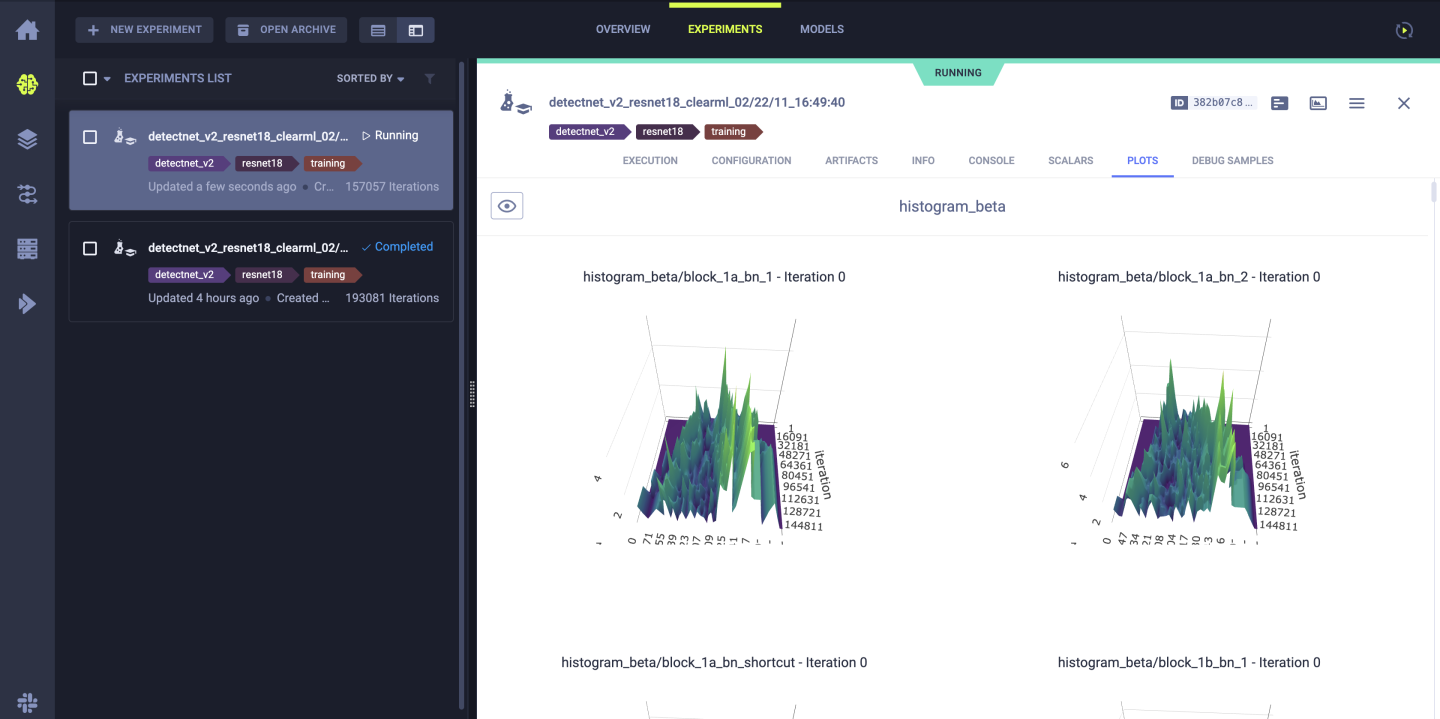

The following are sample images from a successful visualization run for DetectNet_v2.

Image showing intermediate inference images with bounding boxes before clustering using DBScan or NMS

Image showing system utilization plots.

Metrics plotted during training

Streaming logs from the local machine running the training.

Weight histograms of the trained model.