ActionRecognitionNet

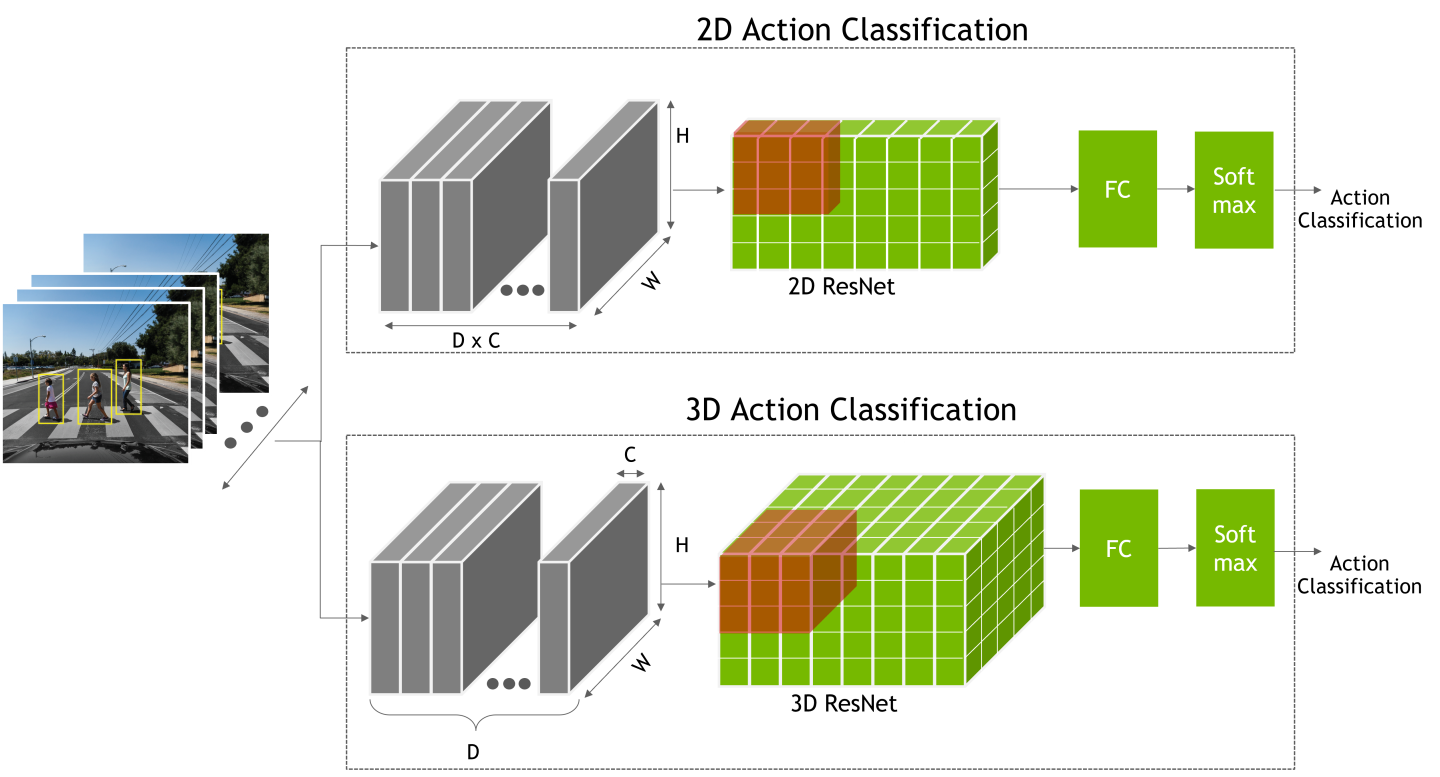

ActionRecognitionNet takes a sequence of images as network input and predicts the action performed by the people in those images. TAO Toolkit provides 2D and 3D network backbones with the following input options: RGB-only input, optical flow (OF)-only input, and two-stream joint input (RGB+OF).

Action Recognition Net Architecture

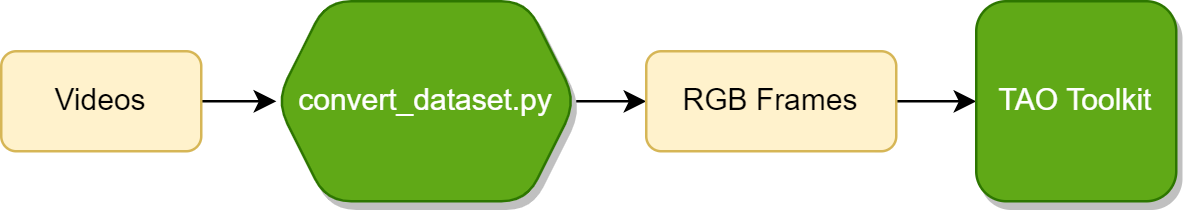

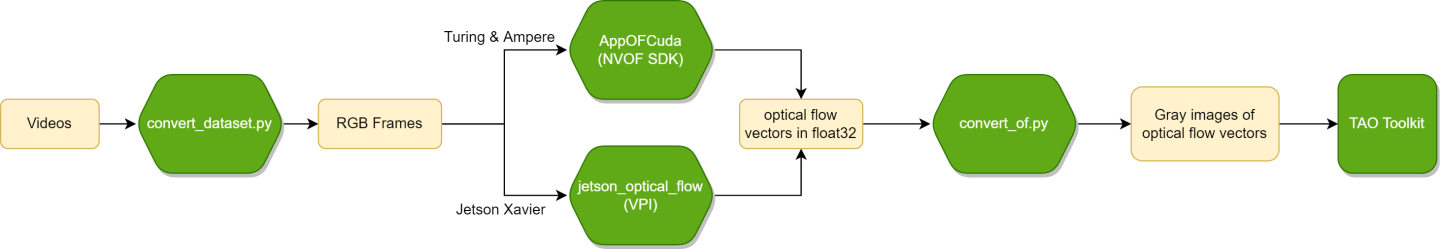

ActionRecognitionNet requires RGB video frames for the RGB input stream and optical flow vectors for the OF input stream. The x-axis and y-axis components of the raw optical flow vectors should be mapped to grayscale images for training. We provide a simple tool to preprocess sample videos: it converts each video to frames and generates optical flow images based on the NVIDIA Optical Flow (NVOF) SDK.

The data should be organized in the following structure:

```
/Dataset
    /class_a
        /video_1
            /rgb
                000000.png
                000001.png
                ...
                N.png
            /u
                000000.jpg
                000001.jpg
                ...
                N-1.jpg
            /v
                000000.jpg
                000001.jpg
                ...
                N-1.jpg
```

The root directory of the dataset contains one sub-directory per class. Each class directory has one sub-folder per video, and each video sub-folder contains rgb, u, and v folders that hold, respectively, the RGB frames, the optical flow x-axis grayscale images, and the optical flow y-axis grayscale images. The u and v folders can be empty if you want to train an RGB-only model. A simple script is provided to generate RGB frames only.
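Before training, it can help to check that a dataset follows this layout. The following is a minimal sketch, not part of TAO Toolkit; the helper name `scan_dataset` is hypothetical. It walks the class and video directories, counts the files in rgb, u, and v, and flags videos whose flow folders are non-empty but do not hold one image fewer than rgb, which is an assumption read off the N.png / N-1.jpg naming shown above.

```python
import os
from typing import Dict, List


def scan_dataset(root: str) -> Dict[str, List[dict]]:
    """Summarize a dataset laid out as root/<class>/<video>/{rgb,u,v}.

    Hypothetical helper for illustration; TAO Toolkit does not ship it.
    """
    summary: Dict[str, List[dict]] = {}
    for class_name in sorted(os.listdir(root)):
        class_dir = os.path.join(root, class_name)
        if not os.path.isdir(class_dir):
            continue
        videos = []
        for video_name in sorted(os.listdir(class_dir)):
            video_dir = os.path.join(class_dir, video_name)
            if not os.path.isdir(video_dir):
                continue
            counts = {}
            for sub in ("rgb", "u", "v"):
                sub_dir = os.path.join(video_dir, sub)
                # A missing u/v folder is treated like an empty one (RGB-only training).
                counts[sub] = len(os.listdir(sub_dir)) if os.path.isdir(sub_dir) else 0
            has_flow = counts["u"] > 0 or counts["v"] > 0
            # Assumption from the naming above: RGB frames 000000..N pair with
            # flow images 000000..N-1, i.e. one flow image fewer per axis.
            flow_ok = (not has_flow) or (
                counts["u"] == counts["v"] == counts["rgb"] - 1
            )
            videos.append({"video": video_name, **counts, "flow_ok": flow_ok})
        summary[class_name] = videos
    return summary
```

For example, iterating over `scan_dataset("/Dataset").items()` and printing every entry whose `flow_ok` is False lists the videos whose optical flow folders look incomplete.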

The common data processing pipelines are depicted in the following diagrams:

RGB-Only data process pipeline

OF-Only data process pipeline

The spec file for ActionRecognitionNet includes model, train, and dataset parameters. Here is an example spec for training a 3D RGB-only model with a resnet18 backbone on a dataset that contains 5 classes: “walk”, “sits”, “squat”, “fall”, and “bend”:

```yaml
model:
  model_type: rgb
  backbone: resnet18
  rgb_seq_length: 3
  input_type: 3d
  sample_rate: 1
  dropout_ratio: 0.0
dataset:
  train_dataset_dir: /data/train
  val_dataset_dir: /data/test
  label_map:
    walk: 0
    sits: 1
    squat: 2
    fall: 3
    bend: 4
  output_shape:
    - 224
    - 224
  batch_size: 32
  workers: 8
  clips_per_video: 15
  augmentation_config:
    train_crop_type: no_crop
    horizontal_flip_prob: 0.5
    rgb_input_mean: [0.5]
    rgb_input_std: [0.5]
    val_center_crop: False
train:
  optim:
    lr: 0.01
    momentum: 0.9
    weight_decay: 0.0001
    lr_scheduler: MultiStep
    lr_steps: [5, 15, 25]
    lr_decay: 0.1
  num_epochs: 30
  checkpoint_interval: 1
```
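Because the spec nests model, dataset, and train sections, a few consistency checks can be run on it before launching a job. The sketch below works on the spec as a plain Python dict (for example, the result of PyYAML's `yaml.safe_load`). The function `check_spec` is hypothetical; it checks only the "Supported Values" constraints listed in the parameter tables on this page, plus one labeled assumption: that label_map indices run from 0 to K-1 without gaps.

```python
from typing import Any, Dict, List

SUPPORTED = {
    "model_type": {"rgb", "of", "joint"},
    "input_type": {"2d", "3d"},
    "backbone": {"resnet18", "resnet34", "resnet50", "resnet101"},
    "lr_scheduler": {"MultiStep", "AutoReduce"},
}


def check_spec(spec: Dict[str, Any]) -> List[str]:
    """Return a list of problems found in an ActionRecognitionNet spec dict.

    Hypothetical helper. The label_map check assumes class indices
    should be 0..K-1 without gaps; the others mirror the parameter tables.
    """
    errors: List[str] = []
    model = spec.get("model", {})
    dataset = spec.get("dataset", {})
    optim = spec.get("train", {}).get("optim", {})

    for key in ("model_type", "input_type", "backbone"):
        if key in model and model[key] not in SUPPORTED[key]:
            errors.append(f"model.{key}={model[key]!r} is not supported")
    scheduler = optim.get("lr_scheduler")
    if scheduler is not None and scheduler not in SUPPORTED["lr_scheduler"]:
        errors.append(f"train.optim.lr_scheduler={scheduler!r} is not supported")

    for key in ("rgb_seq_length", "of_seq_length", "sample_rate"):
        if key in model and model[key] <= 0:
            errors.append(f"model.{key} must be > 0")
    # Default of 0.5 taken from the model parameter table.
    if not 0.0 <= model.get("dropout_ratio", 0.5) <= 1.0:
        errors.append("model.dropout_ratio must be in [0.0, 1.0]")

    if len(dataset.get("output_shape", [224, 224])) != 2:
        errors.append("dataset.output_shape must have exactly 2 entries")
    indices = sorted(dataset.get("label_map", {}).values())
    if indices != list(range(len(indices))):
        errors.append("dataset.label_map indices are not 0..K-1")
    return errors
```

Run against the example spec above, this returns an empty list; a misspelled `model_type` or a gap in `label_map` shows up as a readable message instead of a failure partway through training.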

| Parameter | Data Type | Default | Description |
|---|---|---|---|
| model | dict config | – | The configuration of the model architecture |
| train | dict config | – | The configuration of the training process |
| dataset | dict config | – | The configuration for the dataset |

model

The model parameter provides options to change the ActionRecognitionNet architecture.

```yaml
model:
  model_type: rgb
  backbone: resnet18
  rgb_seq_length: 3
  input_type: 3d
  sample_rate: 1
  dropout_ratio: 0.0
```

| Parameter | Datatype | Default | Description | Supported Values |
|---|---|---|---|---|
| model_type | string | joint | The type of model: rgb for the RGB-only model, of for the OF-only model, or joint for the RGB+OF model | rgb/of/joint |
| backbone | string | resnet18 | The backbone of the model. Currently supported backbones are ResNet18/34/50/101. | resnet18/34/50/101 |
| input_type | string | 2d | The type of input for the model: 2d or 3d | 2d/3d |
| rgb_seq_length | unsigned int | 3 | The number of RGB frames for a single inference | >0 |
| rgb_pretrained_model_path | string | None | The absolute path to pretrained weights for the RGB model | |
| rgb_pretrained_num_classes | unsigned int | 0 | The number of classes of the pretrained RGB model. Use 0 to specify the same number of classes as the current training. | >=0 |
| of_seq_length | unsigned int | 10 | The number of optical flow frames for a single inference | >0 |
| of_pretrained_model_path | string | None | The absolute path to pretrained weights for the OF model | |
| of_pretrained_num_classes | unsigned int | 0 | The number of classes of the pretrained OF model. Use 0 to specify the same number of classes as the current training. | >=0 |
| joint_pretrained_model_path | string | None | The absolute path to pretrained weights for the joint model | |
| num_fc | unsigned int | 64 | The number of hidden units for the joint model | >0 |
| sample_rate | unsigned int | 1 | The sampling interval used to pick frames. For example, if sample_rate is 2, every second frame is picked. | >0 |
| dropout_ratio | float | 0.5 | The probability of dropping out hidden units | 0.0 ~ 1.0 |
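The interaction of rgb_seq_length (or of_seq_length) and sample_rate comes down to index arithmetic. This sketch assumes that a clip starting at frame `start` takes `seq_length` frames spaced `sample_rate` apart; both function names are hypothetical, and TAO Toolkit's own sampler may choose start positions differently.

```python
from typing import List


def clip_frame_indices(start: int, seq_length: int, sample_rate: int) -> List[int]:
    """Indices of the frames forming one clip (hypothetical helper).

    With sample_rate=1 the frames are consecutive; with sample_rate=2
    every second frame is taken, so the clip covers roughly twice the time.
    """
    if seq_length <= 0 or sample_rate <= 0:
        raise ValueError("seq_length and sample_rate must be > 0")
    return [start + i * sample_rate for i in range(seq_length)]


def frames_spanned(seq_length: int, sample_rate: int) -> int:
    """Raw frames covered from the first to the last sampled frame, inclusive."""
    return (seq_length - 1) * sample_rate + 1
```

For example, with rgb_seq_length: 3 and sample_rate: 2, a clip starting at frame 10 uses frames 10, 12, and 14, so it needs 5 raw frames from its start position.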

train

The train parameter defines the hyperparameters of the training process.

```yaml
train:
  optim:
    lr: 0.01
    momentum: 0.9
    weight_decay: 0.0001
    lr_scheduler: MultiStep
    lr_steps: [5, 15, 25]
    lr_decay: 0.1
  num_epochs: 30
  checkpoint_interval: 1
```

| Parameter | Datatype | Default | Description | Supported Values |
|---|---|---|---|---|
| optim | dict config | – | The config for the SGD optimizer, including the learning rate, learning rate scheduler, and weight decay | |
| num_epochs | unsigned int | 10 | The total number of epochs to run the experiment | >0 |
| checkpoint_interval | unsigned int | 5 | The interval at which checkpoints are saved | >0 |
| clip_grad_norm | float | 0.0 | The L2-norm threshold for gradient clipping. 0.0 means no clipping. | >=0 |

optim

The optim parameter defines the config for the SGD optimizer in training, including the learning rate, learning rate scheduler, and weight decay.

```yaml
optim:
  lr: 0.01
  momentum: 0.9
  weight_decay: 0.0001
  lr_scheduler: MultiStep
  lr_steps: [5, 15, 25]
  lr_decay: 0.1
```

| Parameter | Datatype | Default | Description | Supported Values |
|---|---|---|---|---|
| lr | float | 5e-4 | The initial learning rate for training | >0.0 |
| momentum | float | 0.9 | The momentum for the SGD optimizer | >0.0 |
| weight_decay | float | 5e-4 | The weight decay coefficient | >0.0 |
| lr_scheduler | string | MultiStep | The learning rate scheduler. Two schedulers are provided: MultiStep and AutoReduce. | MultiStep/AutoReduce |
| lr_decay | float | 0.1 | The factor by which the scheduler decreases the learning rate | >0.0 |
| lr_steps | int list | [15, 25] | The steps at which the MultiStep scheduler decreases the learning rate | int list |
| lr_monitor | string | val_loss | The value monitored by the AutoReduce scheduler | val_loss/train_loss |
| patience | unsigned int | 1 | The number of epochs with no improvement after which the learning rate is reduced | >0 |
| min_lr | float | 1e-4 | The minimum learning rate during training | >0.0 |
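To see what the example optim settings (lr: 0.01, lr_steps: [5, 15, 25], lr_decay: 0.1) do over 30 epochs, the sketch below computes a MultiStep schedule. It assumes the same semantics as PyTorch's `torch.optim.lr_scheduler.MultiStepLR` (the rate is multiplied by lr_decay once the 0-based epoch index reaches each step); it is an illustration, not TAO Toolkit's scheduler code, and it does not cover AutoReduce.

```python
import bisect
from typing import List


def multistep_lr(epoch: int, base_lr: float, lr_steps: List[int], lr_decay: float) -> float:
    """Learning rate at a 0-based epoch under a MultiStep schedule.

    The number of decays applied equals the number of steps <= epoch.
    """
    num_decays = bisect.bisect_right(sorted(lr_steps), epoch)
    return base_lr * (lr_decay ** num_decays)


if __name__ == "__main__":
    for epoch in (0, 4, 5, 14, 15, 25, 29):
        print(epoch, multistep_lr(epoch, 0.01, [5, 15, 25], 0.1))
```

Under these assumptions the rate is 0.01 for epochs 0 to 4, 0.001 for epochs 5 to 14, 0.0001 for epochs 15 to 24, and 0.00001 for epochs 25 to 29.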

dataset

The dataset parameter defines the dataset source, training batch size, and augmentation.

```yaml
dataset:
  train_dataset_dir: /data/train
  val_dataset_dir: /data/test
  label_map:
    walk: 0
    sits: 1
    squat: 2
    fall: 3
    bend: 4
  output_shape:
    - 224
    - 224
  batch_size: 32
  workers: 8
  clips_per_video: 15
  augmentation_config:
    train_crop_type: no_crop
    horizontal_flip_prob: 0.5
    rgb_input_mean: [0.5]
    rgb_input_std: [0.5]
    val_center_crop: False
```

| Parameter | Datatype | Default | Description | Supported Values |
|---|---|---|---|---|
| train_dataset_dir | string | – | The path to the training dataset | |
| val_dataset_dir | string | – | The path to the validation dataset | |
| label_map | dict | – | A dict that maps the class names to indices | |
| output_shape | list | [224, 224] | The output shape after augmentation | unsigned int list with size=2 |
| batch_size | unsigned int | 32 | The batch size for training and validation | >0 |
| workers | unsigned int | 8 | The number of parallel workers processing data | >0 |
| clips_per_video | unsigned int | 1 | The number of clips sampled from a video in an epoch | >0 |
| augmentation_config | dict config | – | The parameters that define the augmentation method | |

For a 3D model, the input layout is NCDHW, where N is the batch size, C is the number of input channels, D is the depth or sequence length, H is the image height, and W is the image width.

For a 2D model, the input layout is N[CxD]HW.
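The two layouts differ only in whether the sequence dimension is kept separate or folded into the channel axis. The sketch below computes the input shape of one batch for a single stream. It assumes 3 channels for RGB frames and 2 channels for optical flow (one for u, one for v); the OF channel count is an assumption based on the u/v folders described earlier, and `input_shape` is a hypothetical helper.

```python
from typing import Tuple


def input_shape(input_type: str, model_type: str, batch_size: int,
                seq_length: int, height: int = 224, width: int = 224) -> Tuple[int, ...]:
    """Shape of one input batch for a single stream (hypothetical helper).

    3D models use NCDHW; 2D models fold the sequence into the channel
    axis, giving N[CxD]HW.
    """
    # Assumption: RGB frames have 3 channels, OF inputs stack u and v (2 channels).
    channels = {"rgb": 3, "of": 2}[model_type]
    if input_type == "3d":
        return (batch_size, channels, seq_length, height, width)
    if input_type == "2d":
        return (batch_size, channels * seq_length, height, width)
    raise ValueError(f"unsupported input_type: {input_type!r}")
```

For the example spec (batch_size 32, rgb_seq_length 3, output_shape [224, 224], input_type 3d), this gives (32, 3, 3, 224, 224); the same settings with input_type 2d give (32, 9, 224, 224). For a joint model, the function would be called once per stream.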

augmentation_config

The augmentation_config parameter contains hyperparameters for augmentation.

```yaml
augmentation_config:
  train_crop_type: no_crop
  horizontal_flip_prob: 0.5
  rgb_input_mean: [0.5]
  rgb_input_std: [0.5]
  val_center_crop: False
```

| Parameter | Datatype | Default | Description | Supported Values |
|---|---|---|---|---|
| train_crop_type | string | random_crop | The crop type used in training: random_crop, multi_scale_crop, or no_crop | random_crop/multi_scale_crop/no_crop |
| scales | float list | [1.0] | The scales used to generate the crop pattern in multi_scale_crop | float list / >0.0 |
| rgb_input_mean | float list | [0.485, 0.456, 0.406] | The input mean for RGB frames: (input - mean) / std | float list / size=1 or 3 |
| rgb_input_std | float list | [0.229, 0.224, 0.225] | The input std for RGB frames: (input - mean) / std | float list / size=1 or 3 |
| of_input_mean | float list | [0.5] | The input mean for OF frames: (input - mean) / std | float list / size=1 or 3 |
| of_input_std | float list | [0.5] | The input std for OF frames: (input - mean) / std | float list / size=1 or 3 |
| val_center_crop | bool | False | Specifies whether to center crop the images in validation | |
| crop_smaller_edge | unsigned int | 256 | The length to which the short side of the images is resized before random_crop in training or center_crop in validation | >0 |
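The resize, crop, and normalization parameters combine as follows when val_center_crop is True. This sketch covers only the geometry and the (input - mean) / std formula. It assumes that crop_smaller_edge scales the short side to the given length while preserving the aspect ratio, and that pixel values are already scaled to [0, 1] before normalization; the helper names are hypothetical.

```python
from typing import List, Sequence, Tuple


def resize_short_side(width: int, height: int, short_side: int = 256) -> Tuple[int, int]:
    """New (width, height) after scaling the shorter edge to short_side, keeping aspect ratio."""
    if width <= height:
        return short_side, round(height * short_side / width)
    return round(width * short_side / height), short_side


def center_crop_box(width: int, height: int,
                    crop_w: int = 224, crop_h: int = 224) -> Tuple[int, int, int, int]:
    """(left, top, right, bottom) of a centered crop_w x crop_h window."""
    left = (width - crop_w) // 2
    top = (height - crop_h) // 2
    return left, top, left + crop_w, top + crop_h


def normalize(pixel: Sequence[float], mean: Sequence[float], std: Sequence[float]) -> List[float]:
    """Apply (input - mean) / std per channel; a size-1 mean/std is broadcast to all channels."""
    if len(mean) == 1:
        mean = list(mean) * len(pixel)
    if len(std) == 1:
        std = list(std) * len(pixel)
    return [(p - m) / s for p, m, s in zip(pixel, mean, std)]
```

For a 1920x1080 frame, the short side becomes 256 and the frame is resized to 455x256; the 224x224 center crop then spans columns 115 to 339 and rows 16 to 240. With the example spec's rgb_input_mean [0.5] and rgb_input_std [0.5], values in [0, 1] map to [-1, 1].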

Use the following command to run ActionRecognitionNet training:

```sh
tao model action_recognition train -e <experiment_spec_file> \
                                   -r <results_dir> \
                                   -k <key> \
                                   [train.gpu_ids=<gpu id list>] \
                                   [train.resume_training_checkpoint_path=<absolute path to *.tlt checkpoint>]
```

Required Arguments

* `-e, --experiment_spec_file`: The path to the experiment spec file
* `-r, --results_dir`: The path to a folder where the experiment outputs should be written
* `-k, --key`: The user-specific encoding key to save or load a .tlt model

Optional Arguments

* `train.gpu_ids`: The list of GPU indices for training. If you set more than one GPU ID, multi-GPU training is triggered automatically.
* `train.resume_training_checkpoint_path`: The path to a checkpoint to continue training from

Here’s an example of using the ActionRecognitionNet training command:

```sh
tao model action_recognition train -e $DEFAULT_SPEC -r $RESULTS_DIR -k $YOUR_KEY
```
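When training is launched from a script, the command and its optional overrides can be assembled as an argument list. The sketch below only builds the list; running it requires the `tao` command to be installed. The function name is hypothetical, and the `[0,1]` list syntax for train.gpu_ids is an assumption about how the override is written.

```python
import shlex
from typing import List, Optional, Sequence


def build_train_command(spec: str, results_dir: str, key: str,
                        gpu_ids: Optional[Sequence[int]] = None,
                        resume_checkpoint: Optional[str] = None) -> List[str]:
    """Assemble `tao model action_recognition train` arguments (hypothetical helper)."""
    cmd = ["tao", "model", "action_recognition", "train",
           "-e", spec, "-r", results_dir, "-k", key]
    if gpu_ids:
        # Assumed override syntax: train.gpu_ids=[0,1]
        cmd.append("train.gpu_ids=[" + ",".join(str(g) for g in gpu_ids) + "]")
    if resume_checkpoint:
        cmd.append(f"train.resume_training_checkpoint_path={resume_checkpoint}")
    return cmd


if __name__ == "__main__":
    cmd = build_train_command("/specs/train.yaml", "/results", "my_key", gpu_ids=[0, 1])
    print(shlex.join(cmd))  # shell-quoted form, for logging or copy-paste
```

Passing the list directly to `subprocess.run(cmd, check=True)` avoids shell quoting issues with the square brackets in the override, which an interactive shell might otherwise try to expand.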

The evaluation metric of ActionRecognitionNet is recognition accuracy. Two video sampling strategies are provided for evaluation on a video: center and conv.

In center evaluation, inference is performed on the middle part of the frames in a video clip. For example, if the model requires 32 frames as input and a video clip has 128 frames, the frames from index 48 to index 79 are used to perform inference.

In conv evaluation, inference is performed on a number of segments of a video clip. For example, a video clip is divided uniformly into 10 parts, and the center of each part is treated as a starting point from which 32 consecutive frames are chosen to form an inference segment. In this manner, an inference segment is generated for every part of the video. The final label of the video is determined by the average score of those 10 segments.
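Both sampling strategies reduce to index arithmetic. The sketch below reproduces the center example from the text (32 of 128 frames gives indices 48 to 79). For conv mode, it takes the center of each uniform part as a start point and averages per-segment class scores. The text does not say how a segment that would run past the last frame is handled; this sketch assumes it is shifted back to end at the last frame. Function names are hypothetical.

```python
from typing import List, Sequence


def center_segment(num_frames: int, seq_length: int) -> List[int]:
    """Frame indices of the centered window used by `center` mode."""
    start = max((num_frames - seq_length) // 2, 0)
    return list(range(start, start + seq_length))


def conv_segments(num_frames: int, seq_length: int, num_segments: int = 10) -> List[List[int]]:
    """One window per uniform part, starting at that part's center (`conv` mode)."""
    part = num_frames / num_segments
    segments = []
    for i in range(num_segments):
        start = int((i + 0.5) * part)
        # Assumption: windows that would overrun the clip are shifted back.
        start = max(min(start, num_frames - seq_length), 0)
        segments.append(list(range(start, start + seq_length)))
    return segments


def average_prediction(segment_scores: Sequence[Sequence[float]]) -> int:
    """Index of the class with the highest score averaged over segments."""
    num_classes = len(segment_scores[0])
    mean = [sum(s[c] for s in segment_scores) / len(segment_scores)
            for c in range(num_classes)]
    return max(range(num_classes), key=lambda c: mean[c])
```

With 128 frames, a 32-frame input, and 10 segments, the first conv window starts at frame 6 and the second at frame 19; the last part's center (frame 121) is shifted back to 96 under the stated assumption.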

Use the following command to run ActionRecognitionNet evaluation:

```sh
tao model action_recognition evaluate -e <experiment_spec_file> \
                                      -k <key> \
                                      evaluate.checkpoint=<model to be evaluated> \
                                      [evaluate.gpu_id=<gpu index>] \
                                      [evaluate.batch_size=<batch size>] \
                                      [evaluate.test_dataset_dir=<path to test dataset>] \
                                      [evaluate.video_eval_mode=<evaluation mode for the video>] \
                                      [evaluate.video_num_segments=<number of segments for conv mode>]
```

Required Arguments

* `-e, --experiment_spec_file`: The experiment spec file to set up the evaluation experiment. This should be the same as the training spec file.
* `-k, --key`: The encoding key for the .tlt model.
* `evaluate.checkpoint`: The .tlt model to be evaluated.

Optional Arguments

* `evaluate.gpu_id`: The GPU index used to run the evaluation. You can specify the GPU index when the machine has multiple GPUs installed. Note that evaluation can only run on a single GPU.
* `evaluate.batch_size`: The batch size to perform inference in evaluation. The default value is 1.
* `evaluate.test_dataset_dir`: The path to the test dataset. If not set, the validation dataset in the experiment_spec will be used.
* `evaluate.video_eval_mode`: The evaluation mode for the video:
  * `center`: Evaluation inference is performed on the middle part of the frames in the video clip. This is the default mode.
  * `conv`: Evaluation inference is performed on a number of segments of a video clip. The final prediction is averaged among all the segments.
* `evaluate.video_num_segments`: The number of segments sampled in a video clip for conv evaluation mode. The default value is 10.

Here’s an example of using the ActionRecognitionNet evaluation command:

```sh
tao model action_recognition evaluate -e $DEFAULT_SPEC -k $YOUR_KEY evaluate.checkpoint=$TRAINED_TLT_MODEL
```

Use the following command to run inference on ActionRecognitionNet with the .tlt model.

```sh
tao model action_recognition inference -e <experiment_spec> \
                                       -k <key> \
                                       inference.checkpoint=<inference model> \
                                       inference.inference_dataset_dir=<path to dataset to be inferenced> \
                                       [inference.gpu_id=<gpu index>] \
                                       [inference.batch_size=<batch size>] \
                                       [inference.video_inf_mode=<inference mode for the video>] \
                                       [inference.video_num_segments=<number of segments for conv mode>]
```

The output will be formatted as [video_sample_path] [labels list of inference segments in this video].

Required Arguments

* `-e, --experiment_spec`: The experiment spec file to set up inference. This can be the same as the training spec.
* `-k, --key`: The encoding key for the .tlt model.
* `inference.checkpoint`: The .tlt model to perform inference with.
* `inference.inference_dataset_dir`: The path to the dataset to perform inference on. It should be a class-level directory, as described in the Preparing the Dataset section.

Optional Arguments

* `inference.gpu_id`: The GPU index used to run inference. You can specify the GPU index when the machine has multiple GPUs installed. Note that inference can only run on a single GPU.
* `inference.batch_size`: The batch size to perform inference. The default value is 1.
* `inference.video_inf_mode`: The inference mode for the video:
  * `center`: Inference is performed on the middle part of the frames in the video clip. This is the default mode.
  * `conv`: Inference is performed on a number of segments of a video clip. All the segment predictions are kept in a label list.
* `inference.video_num_segments`: The number of segments sampled in a video clip for the conv inference mode.

Here’s an example ActionRecognitionNet inference command:

```sh
tao model action_recognition inference -e $DEFAULT_SPEC -k $KEY \
                                       inference.checkpoint=$TLT_TRAINED_MODEL \
                                       inference.inference_dataset_dir=$PATH_TO_INF_DATASET
```

The expected output for the fall class would be as follows:

```
/path/to/fall/video_1 [fall]
/path/to/fall/video_2 [fall]
...
```
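If the inference output is captured to a file, each line can be split into the video path and its label list. The sketch below assumes the `<path> [label, ...]` form shown above, paths without spaces, and comma-separated labels in conv mode (the separator is an assumption; quotes are stripped in case labels are printed as a quoted list). It also tallies a majority label per video. `parse_inference_line` and `majority_label` are hypothetical helpers, not part of TAO Toolkit.

```python
import re
from collections import Counter
from typing import List, Tuple

LINE_RE = re.compile(r"^(?P<path>\S+)\s+\[(?P<labels>[^\]]*)\]\s*$")


def parse_inference_line(line: str) -> Tuple[str, List[str]]:
    """Split '<video path> [label, ...]' into the path and the list of labels."""
    match = LINE_RE.match(line.strip())
    if match is None:
        raise ValueError(f"unrecognized line: {line!r}")
    # Assumption: labels are comma-separated and may be wrapped in quotes.
    labels = [lab.strip().strip("'\"")
              for lab in match.group("labels").split(",") if lab.strip()]
    return match.group("path"), labels


def majority_label(labels: List[str]) -> str:
    """Most frequent label across segments (ties go to the label seen first)."""
    return Counter(labels).most_common(1)[0][0]
```

In center mode each list holds a single label, so the majority vote is only informative for conv-mode output, where one label is kept per segment.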

Use the following command to export ActionRecognitionNet to .etlt format for deployment:

```sh
tao model action_recognition export -k <key> \
                                    -e <experiment_spec> \
                                    export.checkpoint=<tlt checkpoint to be exported> \
                                    [export.gpu_id=<gpu index>] \
                                    [export.onnx_file=<path to exported file>]
```

Required Arguments

* `-e, --experiment_spec`: The experiment spec file to set up export. This can be the same as the training spec.
* `-k, --key`: The encoding key for the .tlt model.
* `export.checkpoint`: The .tlt model to be exported.

Optional Arguments

* `export.gpu_id`: The GPU index used to run the export. You can specify the GPU index when the machine has multiple GPUs installed. Note that export can only run on a single GPU.
* `export.onnx_file`: The path to save the exported model to. The default path is the same directory as the `*.tlt` model.

Here’s an example of using the ActionRecognitionNet export command:

```sh
tao model action_recognition export -e $DEFAULT_SPEC -k $YOUR_KEY export.checkpoint=$TLT_TRAINED_MODEL
```

The deep learning and computer vision models that you trained can be deployed on edge devices, such as a Jetson Xavier, Jetson Nano, or Tesla, or in the cloud with NVIDIA GPUs. The exported `*.etlt` model can be used in a stand-alone TensorRT inference sample or in DeepStream.

DeepStream SDK is a streaming analytics toolkit for accelerating the development of AI-based video analytics applications. TAO Toolkit is integrated with DeepStream SDK, so models trained with TAO Toolkit work out of the box with DeepStream.

Deploying the ActionRecognitionNet in the DeepStream sample

Once you have the .etlt ActionRecognitionNet model, you can deploy it in the DeepStream 3d-action-recognition sample app. Refer to the sample applications documentation for detailed steps to run action recognition in DeepStream.

Running ActionRecognitionNet Inference on the Stand-Alone Sample

A stand-alone TensorRT inference sample is also provided. It consumes the TensorRT engine and supports running 2D or 3D models on images. The sample can be found on GitHub.

To use this sample, you need to generate the TensorRT engine from a `*.etlt` model using trtexec.

Using trtexec

For instructions on generating a TensorRT engine using the trtexec command, refer to the trtexec guide for ActionRecognitionNet.

Usage of inference sample

Once you have the TensorRT engine, you can deploy it in the stand-alone sample. Use the following command to run inference:

```sh
python ar_trt_inference.py --input_images_folder <path to input images folder> \
                           --trt_engine <path to tensorrt engine> \
                           [--center_crop] \
                           [--input_2d]
```

Required Arguments

* `--input_images_folder`: The path to the input images folder. It should be a video_<n>-level directory, as described in the Preparing the Dataset section.
* `--trt_engine`: The path to the TensorRT engine.

Optional Arguments

* `--center_crop`: Resizes the input images so that the short side is 256, then center crops a 224x224 area. If this flag is not set, the input images are directly resized to 224x224.
* `--input_2d`: Set this flag if the engine is generated from a 2D model.

The script performs inference on the images in the folder using a sliding window of 32 frames with a stride of 1, which means inference is done on the following sequences:

```
[frame_0, frame_1, frame_2, ..., frame_31]
[frame_1, frame_2, frame_3, ..., frame_32]
...
```
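The sliding-window schedule above can be generated as follows. This sketch only enumerates the frame-index windows (length 32, stride 1, as described for ar_trt_inference.py); `sliding_windows` is a hypothetical name. The behavior of the actual script on a folder with fewer than 32 frames is not described here, so this version simply yields no windows in that case.

```python
from typing import Iterator, List


def sliding_windows(num_frames: int, window: int = 32, stride: int = 1) -> Iterator[List[int]]:
    """Yield the frame indices of each inference window, in order."""
    for start in range(0, num_frames - window + 1, stride):
        yield list(range(start, start + window))
```

For a folder of N frames (N >= 32), stride 1 yields N - 31 windows, so a 100-frame folder produces 69 inference sequences.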