Visual ChangeNet-Segmentation

The Visual ChangeNet-Segmentation model outputs semantic change segmentation masks given two images as input and detects pixel-level change maps between two given images. Given a test image and a ‘golden’ reference image, the semantic segmentation change mask is comprised of every pixel that has changed between the two input images.

Visual ChangeNet is a state of the art transformer-based Change Detection model. Visual ChangeNet is also based on Siamese Network, which is a class of neural network architectures containing two or more identical subnetworks. In TAO, Visual ChangeNet supports two images as input where the end goal is to either classify or segment the change between the “golden or reference” image and the “test” image. TAO supports the FAN backbone network for both Visual ChangeNet architectures.

TAO Toolkit versions 5.3 and later support some of the foundational models for change detection (classification and segmentation). NV-DINOv2 can now be used as the backbone for the Visual ChangeNet-Classification and Segmentation models.

To mitigate the inferior performance of a standard vision transformer (ViT) on dense prediction tasks, TAO supports the ViT-Adapter_ architecture. This allows a powerful ViT that has learned rich semantic representations from a large corpus of data to achieve comparable performance to vision-specific transformers on dense preidiction tasks.

In TAO, two different types of Change Detection networks are supported: Visual ChangeNet-Segmentation and Visual ChangeNet-Classification intended for segmentation and classification of change between the two input images, respectively. Visual ChangeNet-Segmentation is specifically intended for change segmentation.

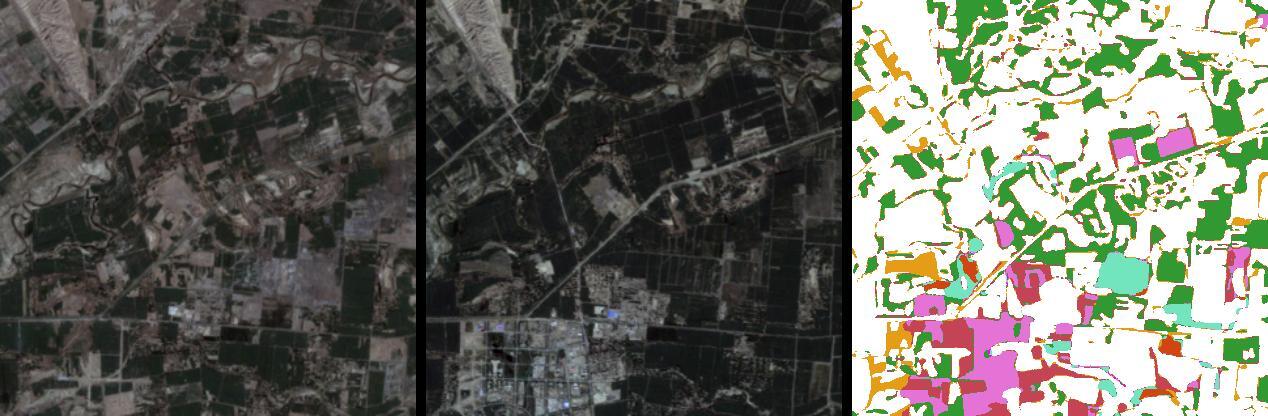

Here is a sample image for pre-change (golden image) and post-change (test image) along with ground-truth segmentation change map side-by-side from the LandSat-SCD dataset:

The training algorithm optimizes the VisualChangeNet network to localize the change between a test image and a golden reference image. This model was trained using the VisualChangeNet-Segmentation training app in the TAO Toolkit v5.1.

The primary use case for this model is defect detection for automated optical inspection (AOI). Other use-cases include detecting change localization in remote sensing imagery.

The datasheet for the model is captured in its model card. We support two model cards for Visual ChangeNet-Segmentation:

Research-only model is hosted at Visual ChangeNet-Segmentation (Research-only).

Commercial model is hosted at Visual ChangeNet-Segmentation (Commercial).